1. Introduction

This document is a user guide for the Live Objects IoT service.

For any question, comment, or suggested improvement regarding this document, please send us an email at support@support-liveobjects.atlassian.net.

2. Overview

2.1. What is Live Objects?

Live Objects is a Software as a Service (SaaS) offering that belongs to the IoT & Data Analytics services.

Live Objects is a software suite for IoT / M2M solution integrators offering a set of tools to facilitate the interconnection between devices (or connected « things ») and business applications:

- Connectivity interfaces (public and private) to collect data from, and send commands or notifications to, IoT/M2M devices,
- Device management: inventory, supervision, configuration, resources, campaign management, etc.,
- Data collection and management: data storage with advanced search features, plus a message enrichment process, a decoding service, and custom pipelines for external enrichment,
- Message routing between devices and business applications,
- Event processing: alarming through an event detection service that analyzes the data flows sent by devices or the events occurring on the devices themselves. When such events take place, Live Objects provides notification mechanisms to complete the process.

The public interfaces are reachable from the internet. The private interfaces connect Live Objects to a specific network (LoRa®).

The SaaS hosts multiple tenants on the same instance without any possible interaction across tenant accounts (i.e. isolation: for example, a device belonging to one tenant cannot communicate with a device belonging to another tenant).

A web portal provides a UI for administration functions such as managing messages and events, supervising your devices, and controlling access to the tenant.

2.2. Architecture

Live Objects SaaS architecture is composed of two complementary layers:

- Connectivity layer: manages the communications with the client devices and applications,
- Service layer: various modules supporting the high-level functions (device management, data processing and storage, etc.).

2.3. Connectivity layer

Live Objects exposes a set of standard and unified public interfaces that allow you to connect any programmable device, gateway, or functional IoT backend.

The existing public interfaces are:

- MQTT: an industry-standard protocol designed for the efficient, real-time exchange of data to and from devices. It is a binary protocol, and MQTT libraries have a small footprint.
- HTTPS: better suited to devices that connect only occasionally. It does not provide an efficient way to communicate from the SaaS to the devices (it requires periodic polling, for example).

The public interfaces share a common security scheme based on API keys that you can manage from Live Objects APIs and web portal.

Live Objects is fully integrated with a selection of devices and networks. It handles communications from specific families of devices and translates them into standardized messages available on the Live Objects message bus.

The existing private interfaces are:

- LoRa® interface, connected with the LoRa® network server:
  - to provision LoRa® devices,
  - to receive and send data from/to LoRa® devices.

Your devices may also not be compliant with Live Objects interfaces. In that case, you can still use the "external connector" feature:

"External connector" mode: a good fit for connecting the custom backend that manages your devices, when your devices don’t support Live Objects connectivity. This mode has an MQTT API, described in the "External connector" mode section.

2.4. Service layer

Behind the device connectivity layer, Live Objects is a platform with several ready-to-use services to enhance your IoT potential.

On the one hand, data collection relies on various services: internal enrichment, decoding, and custom pipelines for external enrichment services.

On the other hand, this data can be published into a FIFO queue and consumed using the MQTT "application" mode:

- FIFO: the solution to prevent message loss when a consumer is unavailable. Messages are stored in a queue on disk until they are consumed and acknowledged. When multiple consumers are subscribed to the same queue concurrently, messages are load-balanced between the available consumers. For more information, see FIFO mode,
- Application mode: a good fit for real-time exchanges between the Live Objects backend and your business application. Exchanged data messages are queued using the FIFO queuing bus mechanism (see mode "Application").
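The FIFO behavior described above can be illustrated with a toy in-memory model. The model covers three points: messages wait in the queue until they are acknowledged, consumers share the load, and an unacknowledged message is not lost. This is a sketch, not the Live Objects implementation. The class name `ToyFifo`, round-robin distribution as the load-balancing policy, and requeuing an unacknowledged message at the head of the queue are all assumptions made for the example.

```python
from collections import deque
from itertools import cycle


class ToyFifo:
    """Hypothetical in-memory model of a FIFO queue with acknowledgement and
    round-robin load balancing. Illustration only."""

    def __init__(self, consumers):
        self.pending = deque()              # messages waiting for delivery
        self.unacked = {}                   # delivery tag -> (consumer, message)
        self._next_consumer = cycle(consumers)
        self._tag = 0

    def publish(self, message):
        # Messages stay queued until a consumer takes and acknowledges them.
        self.pending.append(message)

    def deliver(self):
        # Hand the oldest message to the next consumer in turn (round robin).
        # Raises IndexError if nothing is pending.
        message = self.pending.popleft()
        consumer = next(self._next_consumer)
        self._tag += 1
        self.unacked[self._tag] = (consumer, message)
        return self._tag, consumer, message

    def ack(self, tag):
        # Once acknowledged, the message is removed for good.
        del self.unacked[tag]

    def nack(self, tag):
        # A message that is not acknowledged goes back to the head of the queue.
        _, message = self.unacked.pop(tag)
        self.pending.appendleft(message)
```

In a real deployment, the broker side of this logic is handled by Live Objects. Your application only subscribes and acknowledges.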

2.4.1. Device management

Live Objects offers various functions dedicated to device operators:

- supervise devices and manage connectivity,
- manage device configuration parameters,
- send commands to devices and monitor the status of these commands,
- send resources (any binary file) to devices and monitor the status of this operation.

Live Objects attempts to send commands and resources, or to update parameters, as soon as the device is connected and available.

2.4.2. Data management

2.4.2.1. Data transformation

Live Objects can transform published messages using data enrichment, message decoding, and external data message transformation through custom pipelines.

2.4.2.2. Custom pipeline tutorial video

2.4.2.3. Data analysis and display

Live Objects stores the data collected from any connectivity interface. This data can then be retrieved through the HTTPS REST interface.

A full-text search engine based on Elasticsearch is provided to analyze the stored data. This service is accessible through the HTTPS REST interface.

2.4.3. Messages and Events Processing

2.4.3.1. SEP, SP and AP

The simple event processing (SEP) service aims at detecting notable single events from the flow of data messages.

Based on processing rules that you define, it generates fired events that your business application can consume to initiate downstream actions, such as raising an alarm or executing a business process.

In addition, the activity processing (AP) service aims at detecting devices that have no activity.

The State processing (SP) service aims at detecting changes in "device state" computed from data messages.

2.4.3.2. Routing and Alarming

FIFO mode communication relies on topics to publish messages while ensuring that no message is lost. It can forward or route messages to recipients without loss using FIFO publication and FIFO subscription. You can also use HTTP push forwarding after a message has been processed by the event processing service or the message routing service. These services send notifications or alarms to the intended recipients.

2.5. Access control

2.5.1. API keys

API keys control access to the SaaS: devices, applications, and users use them to authenticate. You must create an API key to use the API.

2.5.2. Users management

When an account is created, a user with administration privileges is also created on the account. This administrator can add other users to the account and set their privileges. These privileges are defined by a set of roles. Users can connect to the Live Objects web portal.

2.6. Edge computing

For advanced usage, there are several ways to integrate an IoT edge platform that can collect, manage, and store business data. More and more use cases require processing close to the devices to provide:

- local processing with low latency and autonomy,
- interoperability with industrial protocols,
- a secure approach that keeps business and critical data local.

2.6.1. Illustration video

3. Getting started

This chapter is a step-by-step manual for Live Objects beginners, with instructions covering the basic use cases of the service. It describes a short process to get started with Live Objects and helps you easily build a use case and an end-to-end test:

- If you have a device, you can start using it.
- If you do not have a device, follow the instructions in this guide to build a use case that uses only simulators in place of a device. You can use your own device simulator and connect your virtual device to Live Objects with the MQTT or CoAP protocol.

Requirement: the data messages sent by the device (or simulator) must respect the Live Objects data model.

3.1. Log in

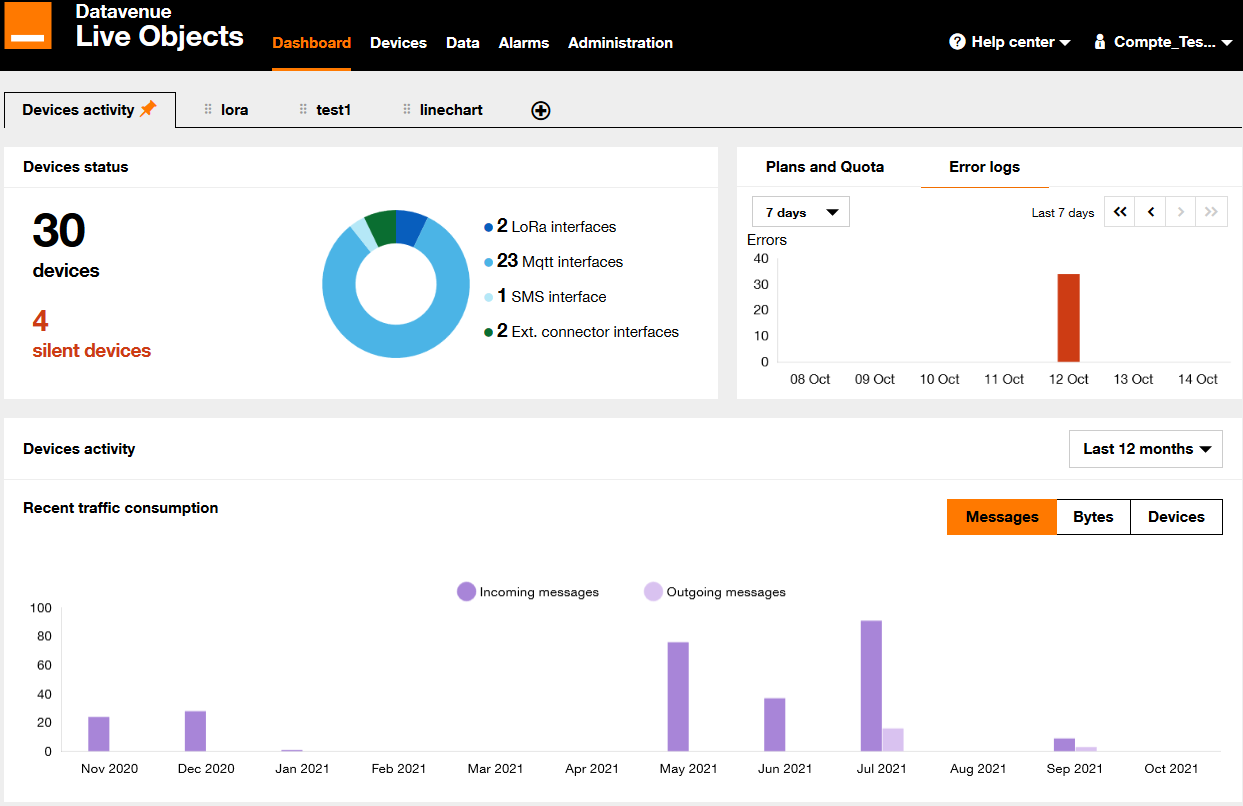

To log in to the Live Objects web portal, connect to liveobjects.orange-business.com using your web browser:

- Fill the Sign in form with your credentials:
  - your login,
  - the password set during the activation phase,
- then click on the Sign in button.

You are redirected to your “Dashboard” page:

3.2. Connect and test your LoRa® device

LoRa® devices need to be registered on Live Objects to send messages.

| To quickly register your LoRa® device, use the Live Objects portal at liveobjects.orange-business.com. |

3.2.1. Register your device

To register a LoRa® device, go to the Devices menu, select LoRa® in the list and click on Add device.

In the form, select the profile that corresponds to your device. If you are not sure, select Generic_classA_RX2SF12. Enter your device’s DevEUI, AppEUI, and AppKey. Depending on the LoRa® device you are using, these parameters are either preconfigured and supplied with the device, or you can configure the device with your own parameters. Then click on Register.

You can see your device in the Devices menu.

3.2.2. Connect your LoRa® device

Once the device is registered, it joins the LoRa® network and is automatically connected to Live Objects. The device is then ready to send uplink data and start publishing data messages.

| If you prefer using an API, see Register a LoRa® device. |

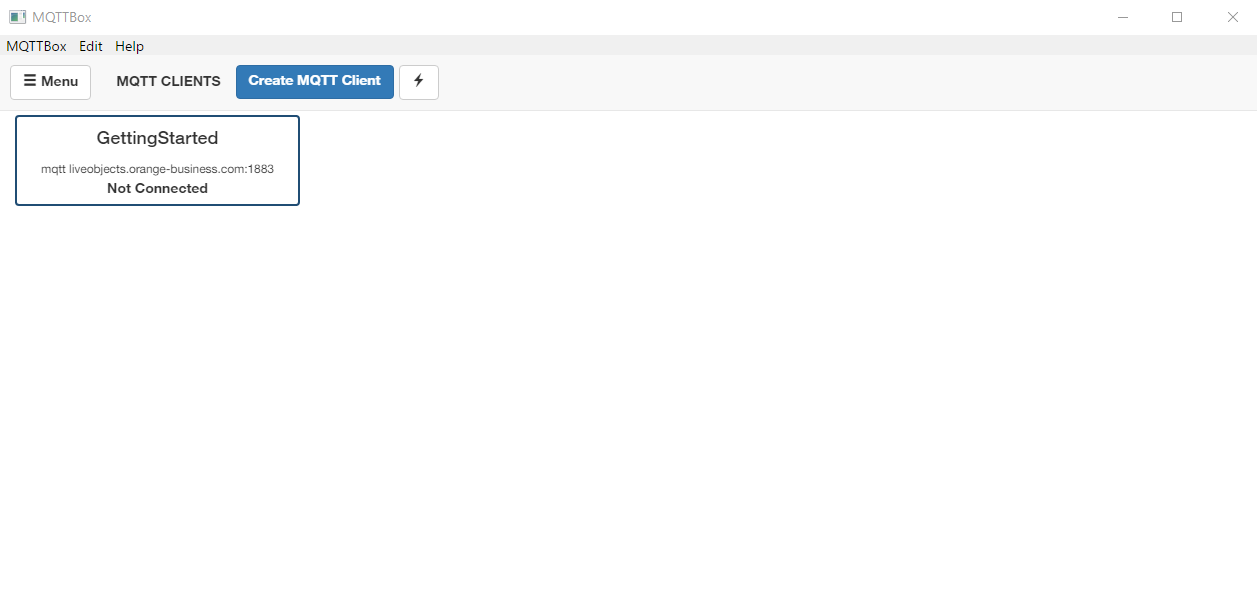

3.3. Simulating an MQTT device

To make a device or an application communicate with Live Objects using MQTT, you will need an API key.

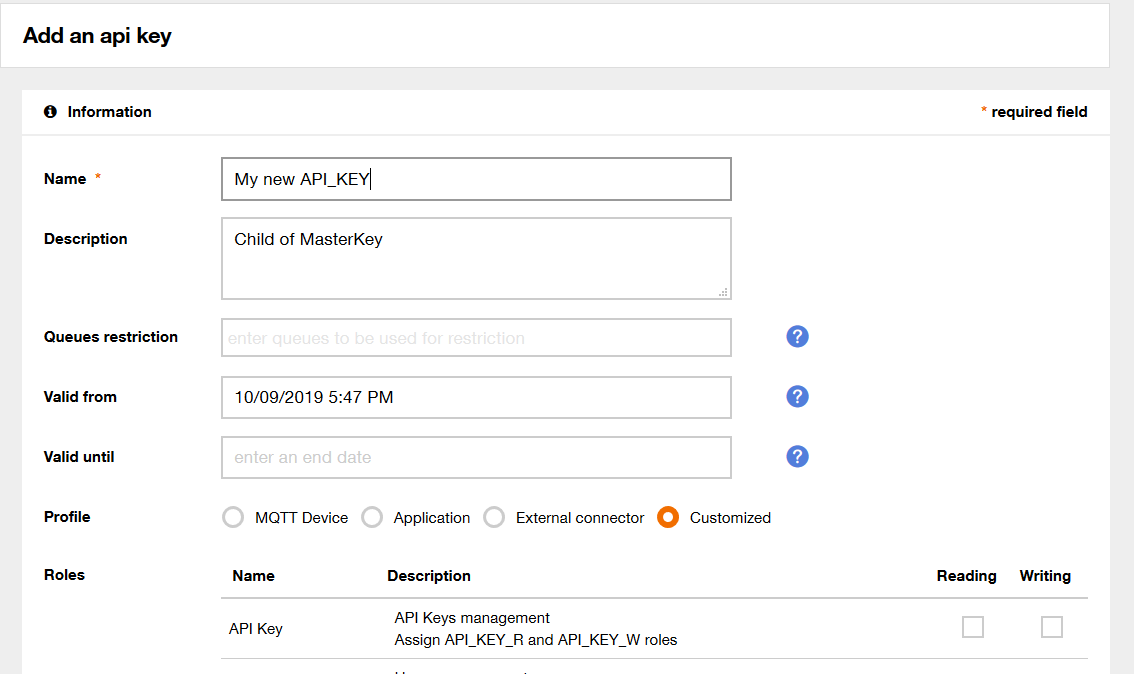

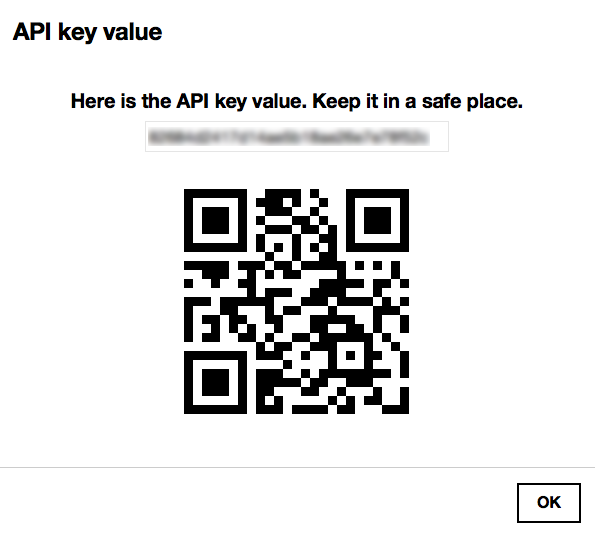

3.3.1. Create an API key

To create an API key, go to the Configuration menu, click on API keys in the left menu, and create a new API key. Select at least the "DEVICE_ACCESS" role.

As a security measure, you cannot retrieve the API key again after you have closed the API key creation results page. Note it down: you will need it for the MQTT client throughout this getting started guide.

3.3.2. Download your simulator tool

You are free to choose your favorite MQTT client or library. Here we use MQTT.fx. This client is available on Windows, macOS, and Linux, and is free (at the time of writing). Download and install the latest version of MQTT.fx.

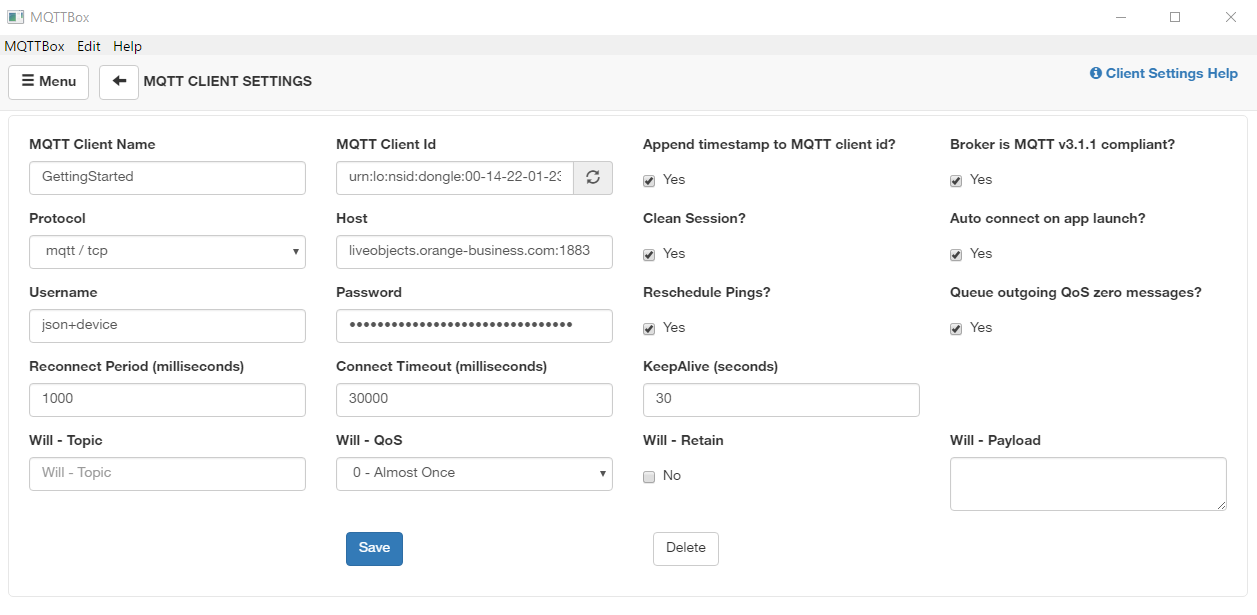

3.3.3. Connect your simulator

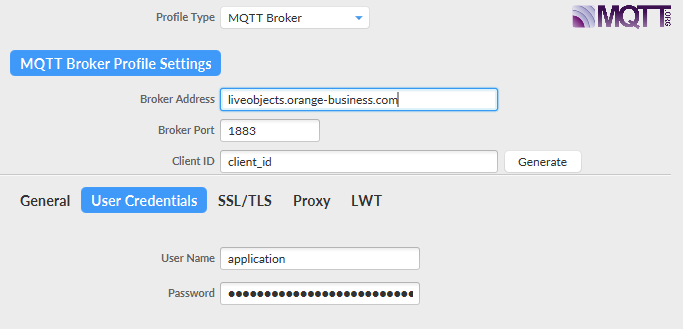

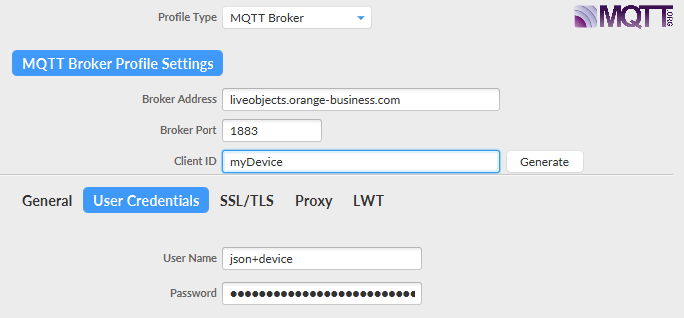

Start by creating a new connection profile and configure it for the device mode setup.

General panel:

Here you configure the Live Objects endpoint, including authentication information. In this panel, set:

- Broker Address: mqtt.liveobjects.orange-business.com,
- Broker Port: 8883,
- Client ID: urn:lo:nsid:dongle:00-14-22-01-23-45 (as an example); more information about the client ID format is available in the devices section,
- Keep Alive Interval: 30 seconds.

Credentials panel:

- username: json+device (for a device mode MQTT connection),
- password: the API key that you just created with the "DEVICE_ACCESS" role.

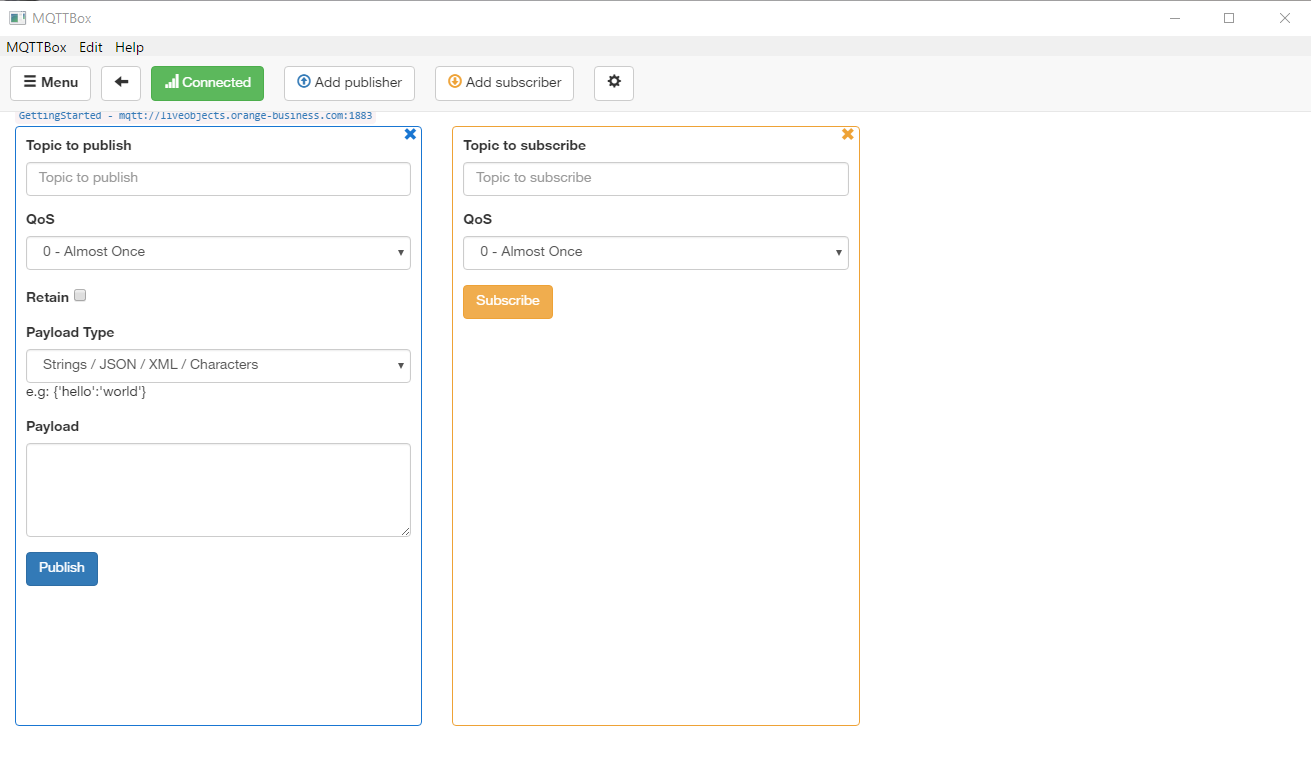

3.3.4. Verify status

You can simulate a device connection to Live Objects by clicking the Connect button of the MQTT.fx client.

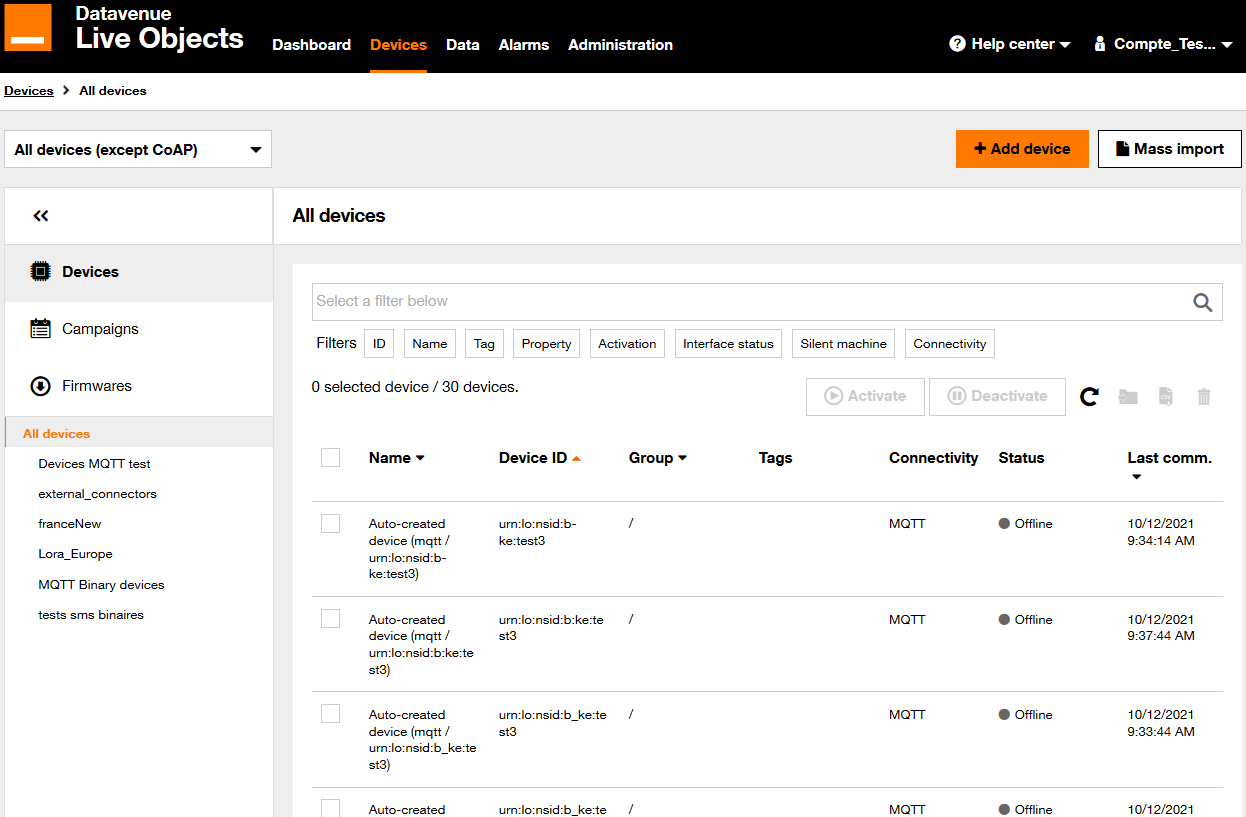

In the Live Objects portal, go to the Devices page: the connected device has a "green" status.

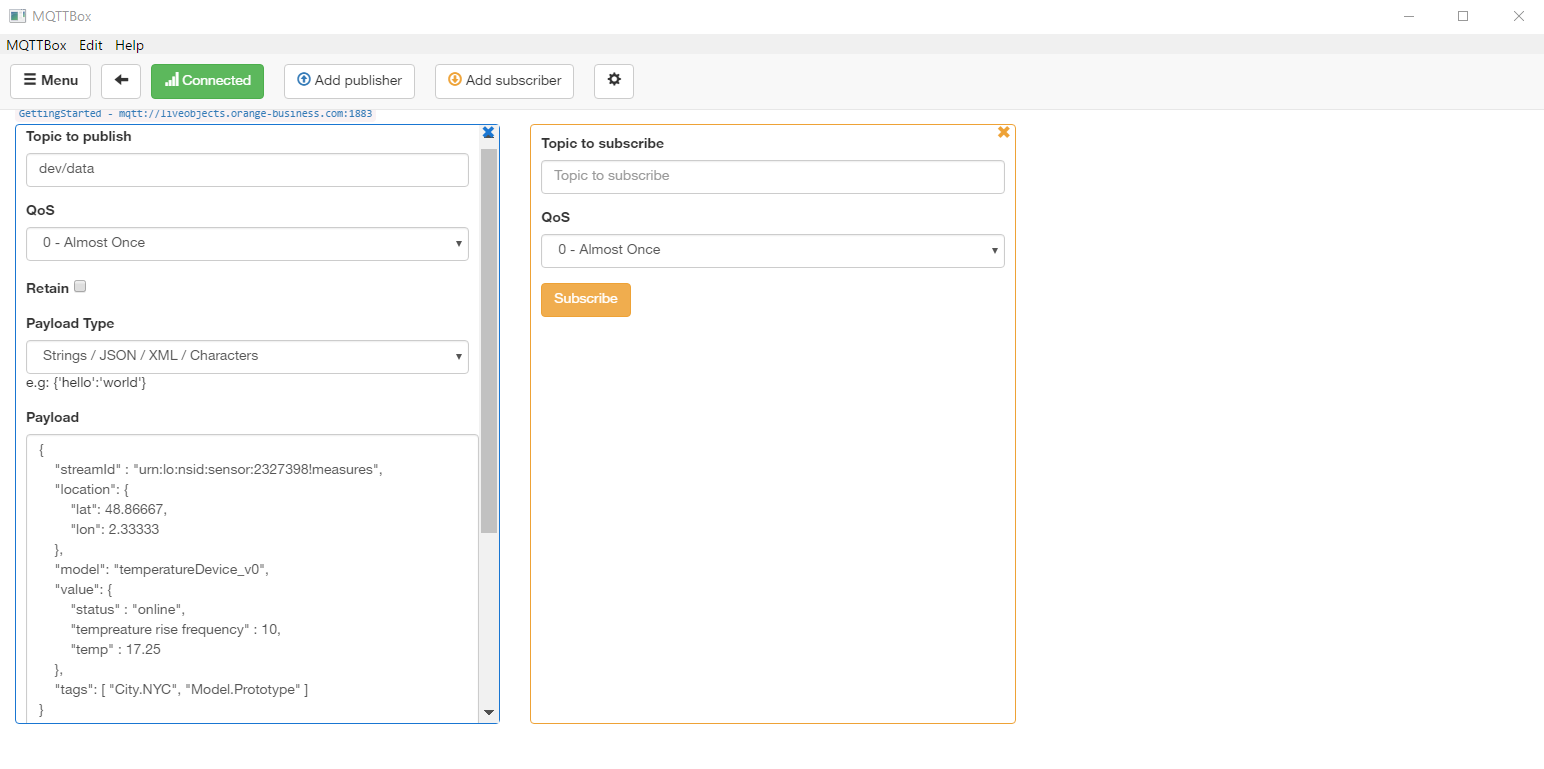

3.3.5. Publishing data messages

Use the MQTT.fx client in device mode to send a data message, as a device would.

Data messages must be published on the following topic: dev/data.

Message:

```json
{
  "streamId": "urn:lo:nsid:dongle:00-14-22-01-23-45!temperature",
  "location": {
    "lat": 48.86667,
    "lon": 2.33333
  },
  "model": "temperatureDevice_v0",
  "value": {
    "status": "online",
    "temperature rise frequency": 10,
    "temp": 17.25
  },
  "tags": ["City.NYC", "Model.Prototype"]
}
```

With:

- streamId: the stream identifier of the message; the message is forwarded to a topic containing the streamId, so that an application connected to Live Objects can retrieve it,
- timestamp: the timestamp of the message; this field is optional and, if the device does not set it, Live Objects sets it to the time of arrival,
- location: the location of the device; this field is optional,
- model: the model of the data contained in the value field (more about data model),
- value: a free field to store device-specific data, such as the device sensor values,
- tags: a list of tags; this field is optional.
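The following Python helper builds a data message in the format above, ready to be published on dev/data. It sets the optional fields only when they are provided. The function name `build_data_message` is hypothetical. The ISO 8601 UTC timestamp format is an assumption; check the data model section for the exact timestamp format Live Objects expects.

```python
import json
from datetime import datetime, timezone

DATA_TOPIC = "dev/data"  # device-mode topic for data messages (from the text above)


def build_data_message(stream_id, model, value, location=None, tags=None, timestamp=None):
    """Build a data message as a JSON string (hypothetical helper).

    timestamp, location and tags are optional; when timestamp is omitted,
    Live Objects uses the time of arrival.
    """
    message = {"streamId": stream_id, "model": model, "value": value}
    if timestamp is not None:
        # Assumption: ISO 8601 in UTC with a trailing "Z".
        utc = timestamp.astimezone(timezone.utc)
        message["timestamp"] = utc.isoformat().replace("+00:00", "Z")
    if location is not None:
        lat, lon = location
        message["location"] = {"lat": lat, "lon": lon}
    if tags:
        message["tags"] = list(tags)
    return json.dumps(message)
```

Pass the returned string as the payload of a publish on `DATA_TOPIC` with the MQTT client or library of your choice.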

3.3.6. Tutorial videos

3.4. Device Management basics

3.4.1. Sending a command to a LoRa® device

Messages sent by LoRa® devices to Live Objects are called uplinks. Messages sent by Live Objects to LoRa® devices are called downlinks.

To send a command, go to Devices then select your LoRa® device in the list and go to Downlink tab. Click on Add command, enter a port number between 1 and 223 and enter data in hexadecimal, 0A30F5 for example. The downlink will be sent to your device after the next uplink.
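Before submitting a downlink in the portal, you can check the two constraints stated above: a port between 1 and 223, and data in hexadecimal. The Python check below is a minimal sketch. The function name `validate_downlink` is hypothetical, and treating an odd number of hex digits as invalid is an assumption, since data is sent as whole bytes.

```python
def validate_downlink(port, hex_data):
    """Check a LoRa downlink command (hypothetical helper).

    Returns the payload as bytes, or raises ValueError.
    """
    if not 1 <= port <= 223:
        raise ValueError(f"port {port} is outside 1..223")
    try:
        # bytes.fromhex rejects non-hex characters and odd-length strings.
        return bytes.fromhex(hex_data)
    except ValueError as exc:
        raise ValueError(f"invalid hexadecimal data: {hex_data!r}") from exc
```

For example, `validate_downlink(1, "0A30F5")` returns the three bytes 0x0A, 0x30, 0xF5.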

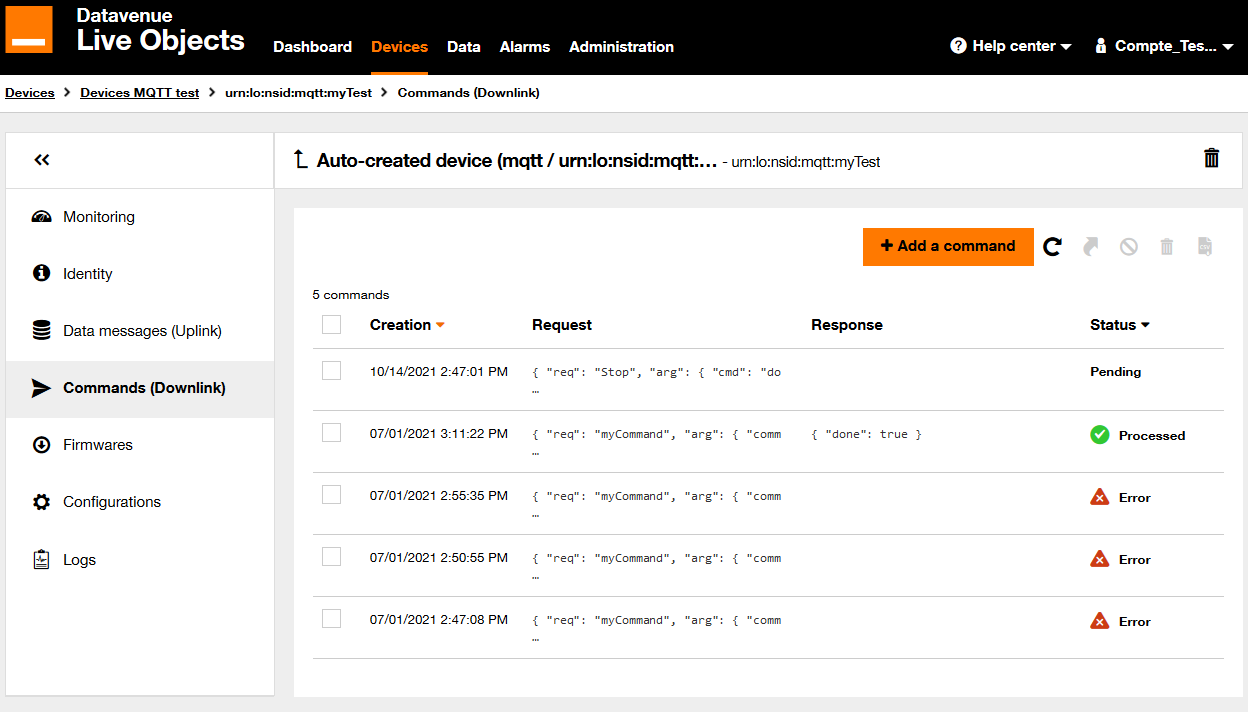

3.4.2. Sending a command to an MQTT device

You must first subscribe to "dev/cmd", the topic on which commands are received (Subscribe tab of MQTT.fx).

Go to Devices, then select your device in the list and go to the Commands tab.

Click on Add command, fill the event field with "reboot", then click on Register. The command appears in the Subscribe tab of the MQTT.fx client.

Command request:

```json
{
  "req": "reboot",
  "arg": {},
  "cid": 94514847
}
```

A response can be sent to acknowledge receipt of the command.

To send this response, publish a message on the topic "dev/cmd/res". The cid (correlation identifier) must be set to the value received in the request.

Command response:

```json
{
  "res": {
    "done": true
  },
  "cid": 94514847
}
```

Once it is published, the status of the command changes to "processed" in the command history tab of the portal.

| A correlation ID uniquely identifies each request (or equivalent) in your interactions with the Live Objects API. |
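The key rule for command acknowledgement is to echo the request's cid in the response. The Python sketch below parses a request received on "dev/cmd" and builds the payload to publish on "dev/cmd/res". The function name `build_command_response` is hypothetical. The `{"done": ...}` content of "res" simply mirrors the example above.

```python
import json


def build_command_response(request_payload, done=True):
    """Build the "dev/cmd/res" payload for a command received on "dev/cmd".

    The response must carry the same cid as the request.
    """
    request = json.loads(request_payload)
    response = {"res": {"done": done}, "cid": request["cid"]}
    return json.dumps(response)
```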

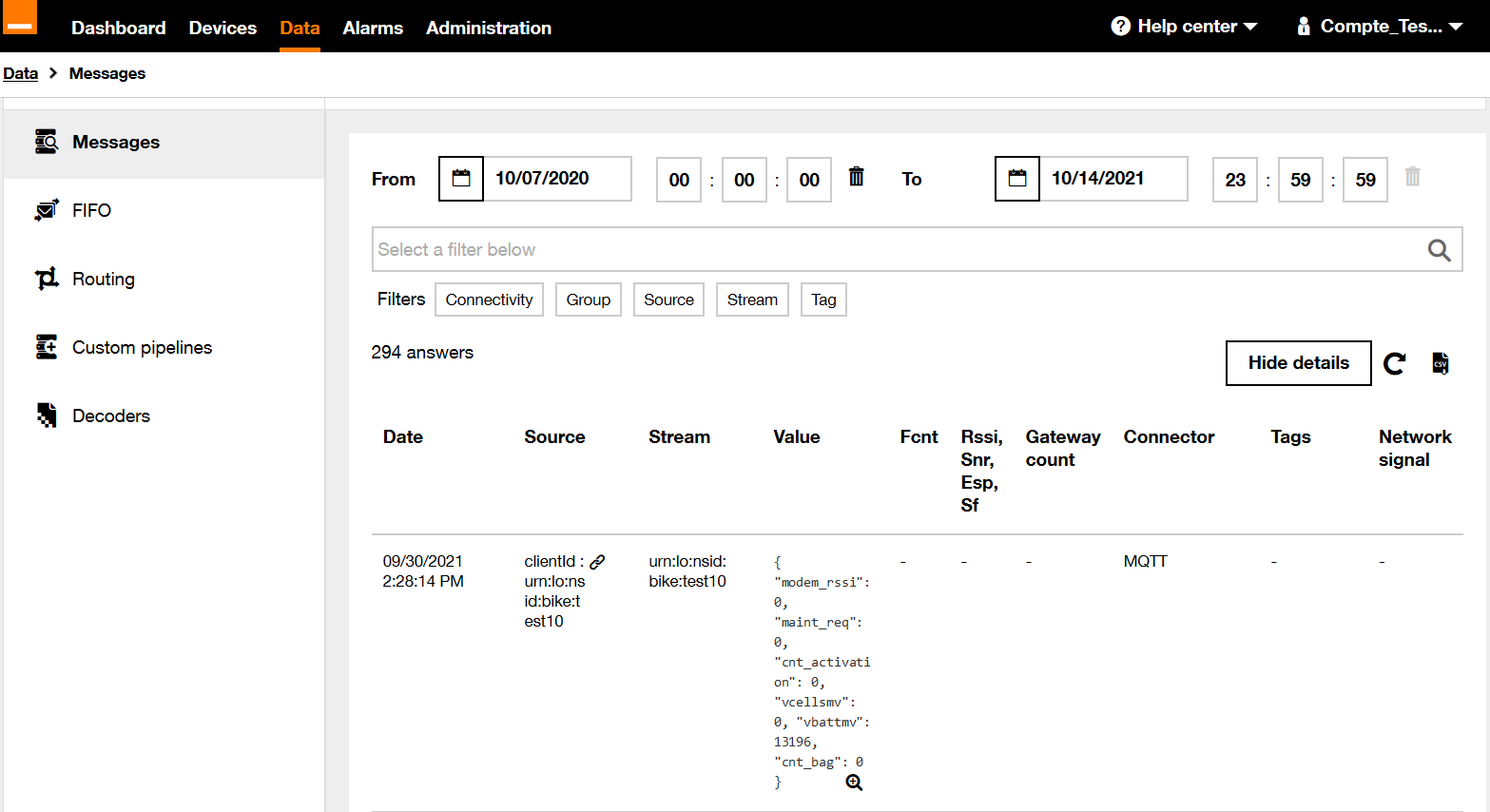

3.5. Accessing the stored data

There are several ways to access your device’s data:

- Going back to the Live Objects portal, you can consult the data message that was just stored. Go to Data, then search for the stream "urn:lo:nsid:dongle:00-14-22-01-23-45!temperature". The data message sent will appear.
- You can access data through the HTTPS interface.
- You can perform complex search queries, such as aggregations, using the Elasticsearch DSL HTTPS interface. See the examples in the Data API chapter.
- Through the MQTT interface, either your configured FIFO queue or your connected MQTT client receives data messages.

3.6. Connect an application to collect your devices data

3.6.1. Using HTTPS to retrieve your devices' data

Data from your device can be retrieved using the HTTPS API.

You will need an API key with the DATA_R role. You can create it as you did in the previous part.

The following endpoint enables you to retrieve data from a stream: https://liveobjects.orange-business.com/api/v0/data/streams/{streamId}

Example with an MQTT device:

```
GET /api/v0/data/streams/urn:lo:nsid:dongle:00-14-22-01-23-45!temperature
Host: liveobjects.orange-business.com
X-API-KEY: <a valid API key with DATA_R role>
```

Example with a LoRa® device:

```
GET /api/v0/data/streams/urn:lo:nsid:lora:<DevEUI>
Host: liveobjects.orange-business.com
X-API-KEY: <a valid API key with DATA_R role>
```
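The same request can be prepared with Python's standard `urllib.request`. The sketch below only builds the request; it does not send it. The helper name `stream_request` is hypothetical. Keeping ":" and "!" unencoded (both are valid in a URL path) while percent-encoding anything else is an assumption made for the example.

```python
import urllib.parse
import urllib.request

BASE_URL = "https://liveobjects.orange-business.com/api/v0/data/streams/"


def stream_request(stream_id, api_key):
    """Prepare (without sending) a GET request for the data of one stream."""
    # ":" and "!" are kept as-is; other unsafe characters are percent-encoded.
    url = BASE_URL + urllib.parse.quote(stream_id, safe=":!")
    return urllib.request.Request(url, headers={"X-API-KEY": api_key}, method="GET")
```

`urllib.request.urlopen(req)` sends the request. An unknown or invalid key is refused with an HTTP error status, which `urlopen` raises as `urllib.error.HTTPError`. For a LoRa® device, pass `"urn:lo:nsid:lora:" + dev_eui` as the stream ID.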

3.6.2. Using MQTTs to retrieve your device’s data

Data published by your devices can be consumed using the MQTT protocol.

You will need an API key with the BUS_R role. You can create it as you did in a previous part.

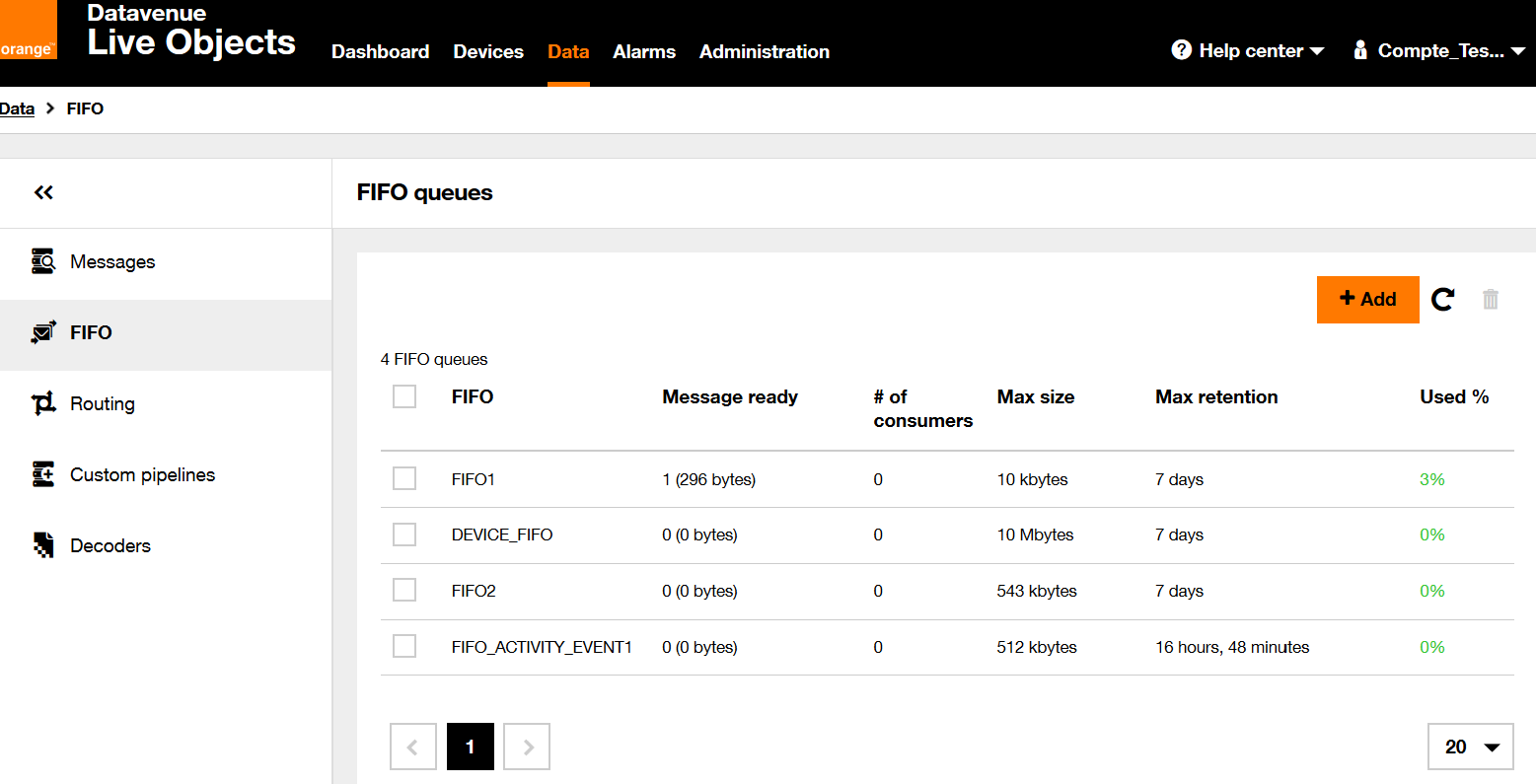

Then configure a FIFO queue to persist your device’s data until you consume it. To do this, create a FIFO queue and then route your device’s data to the queue.

In the Data menu, go to the FIFO tab and click on the add a FIFO queue button. In the pop-in, enter the name myFifo for your FIFO queue, then press the Register button: the newly created FIFO queue myFifo is now listed.

The FIFO queue is ready to persist data. To make your device’s data enter the queue, you have to route them to the queue. More information about routing in the section FIFO publish action.

Future messages sent by your device will be stored in the FIFO queue until an MQTT client subscribes to the queue.

The purpose of the "application" mode is to retrieve the messages available in your FIFO queues.

Available MQTT endpoints are described in the section Endpoints.

First, a connection must be established with the following parameters:

- clientId: ignored,
- username: application,
- password: a valid Live Objects API key with the BUS_R role,
- willRetain, willQoS, willFlag, willTopic, willMessage: ignored.

Then subscribe to the following topic to consume data from your FIFO queue: "fifo/myFifo".
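The application mode connection parameters and the FIFO topic above can be gathered in one place. The Python sketch below is client-agnostic. The class name `ApplicationModeSettings` is hypothetical. With the Eclipse Paho Python client, for example, these values would be passed to `username_pw_set()` and `subscribe()`. The broker host and port come from the Endpoints section and are deliberately not hard-coded here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApplicationModeSettings:
    """Hypothetical holder for MQTT "application" mode settings."""

    api_key: str               # must carry the BUS_R role
    fifo_name: str = "myFifo"
    username: str = "application"
    # clientId and the will* fields are ignored by Live Objects in this mode.

    @property
    def password(self):
        # The API key is sent as the MQTT password.
        return self.api_key

    @property
    def topic(self):
        # Subscribing to this topic consumes the messages of the FIFO queue.
        return f"fifo/{self.fifo_name}"
```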

3.6.3. Tutorial video

3.7. Connect a device without a supported protocol

If you have a fleet of devices that do not support traditional protocols or that are connected to a custom backend, you can use the external connector mode:

- Connect your specific devices with this mode,
- The external connector, which uses the MQTT protocol, allows all your data to be collected in a single place,
- Monitor your devices' connectivity,
- Configure your devices from the Live Objects platform,
- Receive commands from the Live Objects platform,
- Update your devices' resources and configuration.

3.7.1. Tutorial video

4. Account Management and Access Control

4.1. Tenant account

A tenant account is the isolated space on Live Objects dedicated to a specific customer: every interaction between Live Objects and an external actor (user, device, client application, etc.) or registered entities (user accounts, API keys, etc.) is associated with a tenant account.

Live Objects ensures isolation between those accounts: you can’t access the data and entities managed in another tenant account.

Each tenant account is identified by a unique identifier: the tenant ID.

A tenant account also has a name, which should be unique.

4.2. User account

A user account represents a user identity that can access the Live Objects web portal.

A user account is identified by a login. A user account is associated with one or many roles. A user can authenticate on the Live Objects web portal using a login and password.

When a user authentication request succeeds, a temporary API key is generated and returned, with the same roles as the user account.

In case of too many invalid login attempts, the user account is locked out for a while.

For security purposes, a password must be at least 12 characters long and include at least 1 uppercase letter, 1 lowercase letter, 1 special character, and 1 digit.
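The password rule above translates directly into a client-side check. The Python version below is a minimal sketch with a hypothetical function name. Interpreting "special character" as ASCII punctuation (`string.punctuation`) is an assumption; the portal may accept a different set.

```python
import string


def is_valid_password(password):
    """At least 12 characters, with at least one uppercase letter, one
    lowercase letter, one digit and one special character."""
    return (
        len(password) >= 12
        and any(c.isupper() for c in password)
        and any(c.islower() for c in password)
        and any(c.isdigit() for c in password)
        # Assumption: "special character" means ASCII punctuation.
        and any(c in string.punctuation for c in password)
    )
```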

4.3. API key

A Live Objects API key is a secret that a device, an application, or a user can use to authenticate when accessing Live Objects on the MQTT or HTTPS/REST interfaces. At least one API key must be generated. As a security measure, an API key cannot be retrieved after creation (as described in this section).

An API key belongs to a tenant account: after authentication, all interactions will be associated only to this account (and thus isolated from other tenant accounts).

An API key can have zero, one, or many roles. These roles restrict the operations that can be performed with the key.

The validity of an API key can be limited in time.

Creating a tenant account automatically attributes a "master" API key.

That API key is special: it can’t be deleted.

An API key can generate child-API keys that inherit (a subset of) the parent roles and validity period.
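The inheritance rule for child API keys can be expressed as a small check: the child's roles must be a subset of the parent's, and the child must not outlive the parent. The Python sketch below uses a hypothetical `ApiKey` model, not a Live Objects type. Reading "inherit the validity period" as "the child's expiry must not be later than the parent's" is an interpretation of the text.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class ApiKey:
    """Hypothetical model of an API key: its roles and optional expiry."""

    roles: FrozenSet[str]
    valid_until: Optional[datetime] = None  # None means no time limit


def can_create_child(parent, child):
    """True if the child only uses a subset of the parent's roles and does
    not extend beyond the parent's validity period."""
    if not child.roles <= parent.roles:
        return False
    if parent.valid_until is None:
        return True
    return child.valid_until is not None and child.valid_until <= parent.valid_until
```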

An API key can be restricted to one or more message queues:

- if one or more message queues are selected, the API key can access only these queues,
- if no message queue is selected, the API key has no restriction and can access any queue.

Thus, in MQTT, the API key can only access the selected message queues. For example, once each device's data is routed to the right queue, this makes it possible to restrict access to the data of specific devices.
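The queue restriction rule above fits in a one-line predicate. The Python sketch below has a hypothetical function name. The key point is that an empty selection means no restriction, not no access.

```python
def can_access_queue(allowed_queues, queue):
    """Queue restriction rule for an API key used in MQTT.

    An empty selection means "no restriction"; otherwise only the selected
    queues are accessible.
    """
    return not allowed_queues or queue in allowed_queues
```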

Usage:

- In MQTT, clients must connect to Live Objects by using a valid API key value in the password field of the (first) MQTT « CONNECT » packet:
  - in case of an unknown or invalid API key value, the connection is refused,
  - on success, all messages published on this connection are enriched with the API key id and roles.
- In HTTPS, clients must specify a valid API key value in the HTTP header X-API-Key for every request:
  - in case of an unknown API key value, the request is refused (HTTP status 403),
  - in case of an invalid API key value, the request is refused (HTTP status 401),
  - on success, all messages published due to this request are enriched with the API key id and roles.

4.4. Role

A role is attributed to an API key or user account. It defines the privileges on Live Objects.

| Important notice: some features are only available if you have subscribed to the corresponding offer. You may therefore have the proper roles set on your user but no access to some features, because these features are not activated on your tenant account (check the tenant offer). |

The currently available roles and their inclusion in the Admin or User profiles:

| Role Name | Technical value | Admin profile | User profile | Privileges |
|---|---|---|---|---|
| API key | API_KEY_R | X | X | Read parameters and status of an API key. |
| API key | API_KEY_W | X | X | Create, modify, disable an API key. |
| User | USER_R | X | X | Read parameters and status of a user. |
| User | USER_W | X | | Create, modify, disable a user. |
| Settings | SETTINGS_R | X | X | Read the tenant account custom settings. |
| Settings | SETTINGS_W | X | X | Create, modify tenant account custom settings. |
| Device | DEVICE_R | X | X | Read device management parameters and status. |
| Device | DEVICE_W | X | | Create, modify, disable a device, send commands, modify the config, update the resources of a device. |
| Device Campaign | CAMPAIGN_R | X | X | Read parameters and status of a massive deployment campaign on your device fleet. |
| Device Campaign | CAMPAIGN_W | X | | Create, modify a campaign on your device fleet. |
| Data | DATA_R | X | X | Read the data collected by the Store Service or search this data using the Search Service. |
| Data | DATA_W | X | | Insert a data record into the Store Service. Minimum permission required for the API key of a device pushing data to Live Objects in HTTPS. |
| Data Processing | DATA_PROCESSING_R | X | X | Read parameters and status of an event processing rule or a data decoder. |
| Data Processing | DATA_PROCESSING_W | X | | Create, modify, disable an event processing rule or a data decoder. |
| Bus Config | BUS_CONFIG_R | X | X | Read config parameters of a FIFO queue. |
| Bus Config | BUS_CONFIG_W | X | | Create, modify a FIFO queue. |
| Bus Access | BUS_R | X | X | Read data on the Live Objects bus. Minimum permission for the API key of an application collecting data on Live Objects in MQTT(S). |
| Bus Access | BUS_W | X | X | Publish data on the Live Objects bus. |
| Bus Access | DEVICE_ACCESS | X | X | Role to set on a device API key to allow only MQTT device mode. |
| Bus Access | CONNECTOR_ACCESS | X | X | Role to set on an external connector API key to allow only MQTT external connector mode. |
| Audit Log | LOGS_R | X | X | Read the logs collected by the Audit Log service. This right allows users to use the Audit Log service as a debugging tool. |

5. Device and connectivity

Version 1 of the device APIs (/api/v1/deviceMgt) comes with a brand new device model in Live Objects.

We have worked on providing a unified representation, whatever the technology used to connect the device to Live Objects (IP connectivity with MQTT or HTTP, LoRa®, or SMS), while maintaining access to each specific technology.

5.1. Principles

A device is a generic term that can designate a piece of equipment (sensor, gateway) or an entity observed by equipment (e.g. a building or a car).

5.2. Device description

A device description is a set of JSON documents that store, for each device you connect to Live Objects, its identity information, state information, metadata (definition, activity, alias), and connectivity information.

Each device description has 2 sections to describe the device representation:

- Device identity.
- Device interface representation.

5.2.1. Devices representation

5.2.1.1. Device identifier format

A device identity is represented by a unique identifier. This identifier must respect the following format:

urn:lo:nsid:{ns}:{id}

Where:

- ns: your device identifier "namespace", used to avoid conflicts between various families of identifiers,
- id: your device id.

The identifier should only contain alphanumeric characters (a-z, A-Z, 0-9) and/or the special characters - and _ (the id part may also contain :). It must avoid the characters $ ' ' * # / ! | + and must match the following regular expression:

^urn:lo:nsid:([\w-]{1,128}):([:\w-]{1,128})$ (269 characters maximum).

| If your device is auto-provisioned (on first connection), the device identifier is automatically completed with the "urn:lo:nsid" prefix (if missing), followed by the set of characters that match the regular expression above. |
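To validate identifiers before provisioning, the regular expression above can be used as-is. The Python sketch below compiles it with `re.ASCII`, so that `\w` means `[a-zA-Z0-9_]` and accented letters are rejected. It uses `fullmatch` so that a trailing newline cannot slip past the `$` anchor. The helper name `parse_device_id` is hypothetical.

```python
import re

# Pattern from the text above; re.ASCII restricts \w to [a-zA-Z0-9_].
DEVICE_ID_RE = re.compile(r"^urn:lo:nsid:([\w-]{1,128}):([:\w-]{1,128})$", re.ASCII)


def parse_device_id(device_id):
    """Return (namespace, id) for a valid device identifier, else raise ValueError."""
    # fullmatch: the whole string must match, including no trailing newline.
    match = DEVICE_ID_RE.fullmatch(device_id)
    if match is None:
        raise ValueError(f"invalid device identifier: {device_id!r}")
    return match.group(1), match.group(2)
```

Because the namespace part excludes ":", the first ":" after the namespace always separates it from the id. The id itself may contain further ":" characters.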

5.2.1.2. Device object model

Depending on your connectivity interface, the device object model may have a dedicated "definition" section to describe the parameters of your interface(s).

Device model in JSON format:

```
{
  "id": "urn:lo:nsid:sensor:temp001",
  "name": "mySensor001",
  "description": <<some description>>,
  "defaultDataStreamId": <<myStreamId>>,
  "activityState": <<monitoring the device>>,
  "tags": ["Lyon", "Test"],
  "properties": {
    "manufacturer": <<myManufacturer>>,
    "model": <<myModel>>
  },
  "group": {
    "id": <<id>>,
    "path": <<myPathId>>
  },
  "interfaces": [
    {
      "connector": <<myConnector>>,
      "nodeId": <<interface Id>>,
      "deviceId": "urn:lo:nsid:sensor:temp001",
      "enabled": <<true/false>>,
      "status": <<the status of the interface>>,
      "definition": {
        ........to learn more, see the "Device interface representation" section
      },
      "activity": {},
      "capabilities": {
        "command": {
          "version": <<versionNumber>>,
          "available": <<true/false>>
        },
        "configuration": {
          "available": <<true/false>>
        },
        "resources": {
          "available": <<true/false>>
        }
      }
    }
  ],
  "created": <<date>>,
  "updated": <<date>>,
  "staticLocation": {
    "lat": <<Latitude value>>,
    "lon": <<Longitude value>>,
    "alt": <<Altitude value>>
  }
}
```

Device object model description:

| JSON Params | Description |
|---|---|
| id | Device unique identifier (cf. device identifier). |
| description | Optional. Detailed description of the device. |
| name | Optional. Name of the device. |
| defaultDataStreamId | Default data stream ID. Specifies the streamId where the data will be stored (cf. "Manage your data stream" section). |
| tags | Optional. List of additional information used to tag device messages. |
| properties | Optional. Map of key/value string pairs detailing device properties. |
| group | Group to which the device belongs. The group is defined by its id and its path. |
| interfaces | Optional. List of device network interfaces (cf. interface object model). |
| created | Creation date of the device. |
| updated | Last update date of the device. |
| config | Optional. Device configuration. |
| firmwares | Deprecated. Device firmware versions (same value as "resources", kept for compatibility reasons). |
| resources | Optional. Device resource versions. |
| activityState | Optional. Device activity state aggregated from the activity processing service; the special state NOT_MONITORED means that the device is not targeted by any activity rule. |
| staticLocation | Optional. The static location of the device. |

| To avoid exposing personal data, we strongly recommend that you do not add personal or sensitive information in your tags and properties fields. This data is exposed and accessible to other Live Objects services and components. |
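A device description can also be built programmatically, in line with the object model above. The Python sketch below produces a minimal JSON description with a hypothetical helper name. It assumes that only "id" is always needed and that the other fields are added only when given. Check the device API reference for the fields actually required on creation. In line with the recommendation above, keep personal data out of `tags` and `properties`.

```python
import json


def device_description(device_id, name=None, stream_id=None, tags=None,
                       properties=None, static_location=None):
    """Build a minimal device description as a JSON string (hypothetical helper).

    static_location is a (lat, lon, alt) tuple.
    """
    device = {"id": device_id}
    if name is not None:
        device["name"] = name
    if stream_id is not None:
        device["defaultDataStreamId"] = stream_id
    if tags:
        device["tags"] = list(tags)
    if properties:
        # The model defines properties as key/value *string* pairs.
        device["properties"] = {str(k): str(v) for k, v in properties.items()}
    if static_location is not None:
        lat, lon, alt = static_location
        device["staticLocation"] = {"lat": lat, "lon": lon, "alt": alt}
    return json.dumps(device)
```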

5.2.1.3. Device interface representation

An interface is an access to the platform. A device can have zero, one, or several interfaces, which represent the different connectivities the device can use to communicate with Live Objects. Each interface is associated with a protocol managed by a Live Objects connector: LoRa®, SMS, or MQTT. Devices using custom protocols must connect through the external connector interface.

| JSON Params | Description |
|---|---|
| connector | Connector identifier. |
| nodeId | Interface unique identifier. |
| deviceId | Optional. Device unique identifier. |
| enabled | Defines whether the interface is enabled or disabled. |
| status | Interface status. |
| definition | Interface definition. The definition depends on the connector. |
| lastContact | Optional. Date of the last uplink from the device; with LoRa® connectivity, this field is also updated when the device sends a join request. |
| activity | Interface activity. The activity depends on the connector. |
| capabilities | Interface capabilities. |
| locations | Optional. List of the last interface locations. |
| created | Registration date of the interface. |
| updated | Last update date of the interface. |

For more information on each connector's definition, activity, and status, see the appropriate section:

- LoRa®: see the LoRa® connector section,
- SMS: see the SMS connector section,
- MQTT: see the MQTT connector section,
- External connector: see the MQTT External connector section,
- LwM2M connector: see the LwM2M connector section.

5.2.1.4. Interface status

Each interface has a status field which shows the state of the corresponding interface. The values are the same for all connectors, but each connector sets the status differently. The following table shows which statuses are supported, or will soon be supported by connectors.

| Status \ Connector | | | | | |
|---|---|---|---|---|---|
| REGISTERED | ☑ | ☑ | ☐ | ☑ | ☑ |
| ONLINE | ☐ | ☑ | ☑ | ☑ | ☑ |
| OFFLINE | ☐ | ☑ | ☐ | ☑ | ☑ |
| SLEEPING | ☐ | ☐ | ☐ | ☐ | ☑ |
| CONNECTIVITY_ERROR | ☑ | ☐ | ☐ | ☐ | ☐ |
| INITIALIZING | ☑ | ☐ | ☐ | ☐ | ☐ |
| INITIALIZED | ☑ | ☐ | ☐ | ☐ | ☐ |
| REACTIVATED | ☑ | ☐ | ☐ | ☐ | ☐ |
| ACTIVATED | ☑ | ☐ | ☐ | ☐ | ☐ |
| DEACTIVATED | ☑ | ☑ | ☑ | ☐ | ☑ |

Each interface has an enabled flag which allows or forbids an interface to connect and communicate with Live Objects. The enabled flag changes the interface’s status.

This flag can be set when creating the interface or updating the interface.

The following table shows a description of each technical value of the interface status.

| Status Value | Description |
|---|---|
| REGISTERED | The device has been registered in the network with the parameters specified when it was created. No uplink data has been received by the platform yet. |
| INITIALIZING | The network received a Join Request from the device. |
| INITIALIZED | The network sent a Join Accept to the device. |
| ACTIVATED | At least one uplink issued by the device was received by Live Objects (excluding MAC messages). |
| DEACTIVATED | The device has been deactivated in Live Objects. It can no longer communicate on the network (see the paragraph "Deactivate and reactivate a LoRa® device" and the deactivation of the LwM2M device). |
| REACTIVATED | The device has been reactivated in Live Objects. Because the state of the device at the time of reactivation cannot be known in advance, the status changes to "Initializing" if a Join Request is received, or directly to "Activated" if an uplink is received. |
| CONNECTIVITY_ERROR | This rare status is displayed when there is a problem with the configuration of the device in the network. If this status appears, contact your support. |
| ONLINE (MQTT) | The MQTT connection of the device is active. |
| ONLINE (LwM2M) | The LwM2M device is registered and active. |
| SLEEPING (LwM2M) | The LwM2M device is in sleeping mode, but the session is still active. |
| OFFLINE (MQTT) | The device has already connected at least once, but its MQTT connection is inactive. |
| OFFLINE (LwM2M) | The LwM2M device is deregistered or deactivated. |
| ONLINE (SMS) | The SMS device is activated at Live Objects level. |
| OFFLINE (SMS) | The SMS device is deactivated at Live Objects level. |

5.2.1.5. Capabilities

| Capabilities \ Connector | LoRa® | MQTT | SMS | External connector | LwM2M |
|---|---|---|---|---|---|
| Command | ☑ | ☑ | ☑ | ☑ | ☑ |
| Configuration | ☐ | ☑ | ☐ | ☐ | ☑ |
| Resource | ☐ | ☑ | ☐ | ☐ | ☑ |
| Twin | ☐ | ☐ | ☐ | ☐ | ☑ |

Interface capabilities indicate which Live Objects features the interface is compatible with:

-

Command: compatibility with the generic command engine and API.

-

Configuration: compatibility with the configuration update feature. MQTT connectivity only for now.

-

Resource: compatibility with the resource update feature. MQTT connectivity only for now.

-

Twin: compatibility with the Live Objects twin service. LwM2M/CoAP connectivity only for now.
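Client code can read these flags from the `capabilities` object of an interface before using a feature. The following Python sketch is illustrative only. `has_capability` is a hypothetical helper and is not part of any Live Objects SDK. It treats an absent capability as unavailable. The example responses in this guide name the resource capability both `resource` and `resources`, so the helper accepts either spelling.

```python
from typing import Any, Dict

# Both spellings of the resource capability appear in the example responses.
_ALIASES = {"resource": ("resource", "resources"), "resources": ("resource", "resources")}


def has_capability(interface: Dict[str, Any], name: str) -> bool:
    """Return True if the interface reports capability `name` as available.

    `interface` is one element of an "interfaces" list returned by the device
    management API. A capability that is absent is treated as unavailable.
    """
    capabilities = interface.get("capabilities", {})
    for key in _ALIASES.get(name, (name,)):
        entry = capabilities.get(key)
        if isinstance(entry, dict):
            return bool(entry.get("available", False))
    return False


sms_interface = {
    "connector": "sms",
    "capabilities": {
        "command": {"version": 1, "available": True},
        "configuration": {"available": False},
        "resources": {"available": False},
    },
}
print(has_capability(sms_interface, "command"))  # True
print(has_capability(sms_interface, "twin"))     # False
```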

5.2.1.6. Device static location

The static location is a set of declared geographical coordinates (latitude, longitude and altitude). Its section in the device object model must have the following format:

"staticLocation": {
  "lat": <<Latitude value>>,
  "lon": <<Longitude value>>,
  "alt": <<Altitude value>>
}

This information is useful for stationary devices, or for devices that do not send location information to Live Objects.
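Here is a small Python sketch that builds this section. The helper name `static_location` and its range checks are assumptions: decimal degrees are assumed, since this guide does not state units. The `alt` field is omitted when not supplied, as in the registration example of this section.

```python
from typing import Dict, Optional


def static_location(lat: float, lon: float, alt: Optional[float] = None) -> Dict[str, float]:
    """Build the "staticLocation" section of a device body (hypothetical helper).

    Assumption: coordinates are decimal degrees; altitude is optional.
    """
    if not -90.0 <= lat <= 90.0:
        raise ValueError(f"latitude out of range: {lat}")
    if not -180.0 <= lon <= 180.0:
        raise ValueError(f"longitude out of range: {lon}")
    location = {"lat": lat, "lon": lon}
    if alt is not None:
        location["alt"] = alt
    return location


device = {"id": "urn:lo:nsid:sensor:temp001", "staticLocation": static_location(45.764, 4.8357)}
```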

POST /api/v1/deviceMgt/devices

{
  "id": "<myDeviceId>",
  "description": "Device 123",
  "name": "My Device",
  "defaultDataStreamId": "MydefaultStream",
  "interfaces": [
    {
      "connector": "mqtt",
      "enabled": true,
      "definition": {
        "clientId": "<myDeviceId>",
        "encoding": "myEncoding"
      }
    }
  ],
  "staticLocation": {
    "lat": <<Latitude value>>,
    "lon": <<Longitude value>>
  }
}

5.3. Device management basic

5.3.1. Register a device

5.3.1.1. Request

Endpoint:

POST /api/v1/deviceMgt/devices

HTTP Headers:

X-API-Key: <your API key>
Content-Type: application/json
Accept: application/json

Body:

| JSON Params | Description |
|---|---|
| id | device unique identifier (Cf. device object model) |
| tags | Optional. (Cf. device object model) |
| name | Optional. (Cf. device object model) |
| description | Optional. (Cf. device object model) |
| defaultDataStreamId | Optional. (Cf. device object model) |
| properties | Optional. (Cf. device object model) |
| group | Optional. (Cf. device object model) |
| interfaces | Optional. (Cf. device object model) |

Devices can be registered with one or more interfaces. Currently, you can associate an SMS interface (Cf. register device with an SMS interface example) or a LoRa® interface (Cf. register device with a LoRa® interface example) with a device. The other supported interfaces (MQTT and External connector) can be registered automatically during the first MQTT connection.
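The Python sketch below shows one way to prepare this request with the standard library, without sending it. The base URL is an assumption; adapt it to your environment. `build_register_request` is a hypothetical helper. It only checks for `id`, because that is the only mandatory parameter in the table above.

```python
import json
import urllib.request

# Assumption: public Live Objects REST host; replace it if your tenant uses another one.
BASE_URL = "https://liveobjects.orange-business.com"


def build_register_request(api_key: str, device: dict) -> urllib.request.Request:
    """Prepare (without sending) a POST /api/v1/deviceMgt/devices request."""
    if "id" not in device:
        raise ValueError("'id' is mandatory; the other fields are optional")
    return urllib.request.Request(
        url=f"{BASE_URL}/api/v1/deviceMgt/devices",
        data=json.dumps(device).encode("utf-8"),
        headers={
            "X-API-Key": api_key,
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


request = build_register_request(
    "<your API key>",
    {"id": "urn:lo:nsid:sensor:temp001", "name": "mySensor001", "group": {"path": "/france/lyon"}},
)
# urllib.request.urlopen(request) would send it; the response body is the registered device.
```

`urllib.request.Request` stores header names capitalized (for example `Content-type`). This is harmless because HTTP header names are case-insensitive.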

Example: Register a device without an interface

POST /api/v1/deviceMgt/devices

{
  "id": "urn:lo:nsid:sensor:temp001",
  "tags": ["Lyon", "Test"],
  "name": "mySensor001",
  "description": "moisture sensor",
  "properties": {
    "manufacturer": "Orange",
    "model": "MoistureSensorV3"
  },
  "group": {
    "path": "/france/lyon"
  }
}

5.3.1.2. Response

HTTP Code:

200 OK

Body:

Error case:

| HTTP Code | Error code | message |
|---|---|---|
| 400 | GENERIC_INVALID_PARAMETER_ERROR | The submitted parameter is invalid. |
| 403 | GENERIC_OFFER_DISABLED_ERROR | The requested service is disabled in your offer settings. Please contact a sales representative. |
| 403 | GENERIC_ACTION_FORBIDDEN_ERROR | You do not have the required permissions to execute this action. |
| 404 | DM_GROUP_NOT_FOUND | Group not found |
| 409 | DM_INTERFACE_DUPLICATE | Interface already exists. Conflict on (connector/nodeId) |
| 409 | DM_DEVICE_DUPLICATE | Conflict on device id |

Example: Register a device without an interface

{
  "id": "urn:lo:nsid:sensor:temp001",
  "description": "moisture sensor",
  "name": "mySensor001",
  "defaultDataStreamId": "urn:lo:nsid:sensor:temp001",
  "tags": ["Lyon", "Test"],
  "properties": {
    "manufacturer": "Orange",
    "model": "MoistureSensorV3"
  },
  "group": {
    "id": "sWyaL2",
    "path": "/france/lyon"
  },
  "created": "2018-02-12T13:29:52.442Z",
  "updated": "2018-02-12T13:29:52.442Z"
}

5.3.2. List devices

5.3.2.1. Request

Endpoint:

GET /api/v1/deviceMgt/devices

Query parameters:

| Name | Description |
|---|---|
| limit | Optional. Maximum number of devices in the response. 20 by default. |
| offset | Optional. Number of entries to skip in the results list. 0 by default. |
| sort | Optional. Sorting selection. Prefix with '-' for descending order. Supported values: id, name, group, created, updated, interfaces.status, interfaces.enabled, interfaces.lastContact. Example: sort=-id |
| id | Optional. Device id. |
| groupPath | Optional. Group path. Supported filters: exact match: foo; group or subgroups: foo/* |
| groupId | Optional. Filter list by groupId. |
| name | Optional. Device name. Supported filters: contains: *foo*; ends with foo: *foo; starts with foo: foo*; exact match: "foo" or foo |
| tags | Optional. Filter list by device tags. |
| connectors | Optional. Filter list by interface connector. |
| fields | Optional. Fields to return for each device. By default, the information returned is id, name, group and tags. Supported values: name, description, group, tags, properties, interfaces, config, firmwares (deprecated), resources, defaultDataStreamId, activityState, created and updated. |
| interfaces.nodeId | Optional. Filter list by nodeId. |
| interfaces.status | Optional. Filter list by interface status. |
| interfaces.enabled | Optional. Filter list by interface enabled state. |
| property.{filterName} | Optional. Multiple filters. Example: devices?property.temperature=25&property.humidity=58… |
| filterQuery | Optional. Device filter expression using RSQL notation. Supported device properties are |

HTTP Headers:

X-API-Key: <your API key>
Accept: application/json
X-Total-Count: <boolean>

Simple devices list request:

Get the name, creation date and group of the devices whose name starts with mySensor, sorted by id in descending order.

GET /api/v1/deviceMgt/devices?name=mySensor*&sort=-id&fields=name,created,group

RSQL advanced devices list request:

Get only the id field of the devices matching the following filterQuery (URL-encoded in the request below): groupPath==/France;tags==demo;(connector==lwm2m or connector==x-connector)

That is, devices having /France as group path and demo among their tags, with lwm2m or x-connector as connector. Since ";" (AND) takes precedence over "or" in RSQL, the two connector alternatives are grouped in parentheses.

GET /api/v1/deviceMgt/devices?fields=id&filterQuery=groupPath%3d%3d/France%3btags%3d%3ddemo%3b(connector%3d%3dlwm2m+or+connector%3d%3dx-connector)
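Here is a minimal Python sketch that builds such request paths with the standard library. `list_devices_url` is a hypothetical helper. The characters it leaves unencoded are a choice made to mirror the examples, and the case of percent-escapes (%3D vs %3d) does not matter. In standard RSQL, `;` (AND) binds more tightly than `or` (or `,`), so an OR that should apply to only one condition must be wrapped in parentheses, as the connector alternatives are here.

```python
from typing import Dict
from urllib.parse import quote_plus, urlencode


def list_devices_url(params: Dict[str, str]) -> str:
    """Build a GET /api/v1/deviceMgt/devices path with an encoded query string.

    '=' and ';' (RSQL operators) are percent-encoded and spaces become '+';
    '/', '(', ')', '*' and ',' are left readable, as in the examples.
    """
    query = urlencode(params, safe="/()*,", quote_via=quote_plus)
    return "/api/v1/deviceMgt/devices?" + query


rsql = "groupPath==/France;tags==demo;(connector==lwm2m or connector==x-connector)"
url = list_devices_url({"fields": "id", "filterQuery": rsql})
```

A dict is used instead of keyword arguments so that dotted parameter names such as `interfaces.status` can be passed.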

5.3.2.2. Response

HTTP Code:

200 OK

Body: list of device objects (Cf. device object model)

| JSON Params | Description |
|---|---|
| id | device unique identifier |
| description | Optional. detailed description of the device |
| name | Optional. name of the device |
| defaultDataStreamId | Optional. default data stream Id of the device |
| tags | Optional. list of device tags |
| properties | Optional. properties of the device |
| group | Optional. group to which the device belongs |
| interfaces | Optional. list of the device’s network interfaces |
| created | Optional. registration date of the device |
| updated | Optional. last update date of the device |
| config | Optional. device configuration |
| firmwares | Deprecated. Device firmware versions (same value as "resources", kept for compatibility reasons) |
| resources | Optional. device resource versions |
| activityState | Optional. device activity state aggregated from the activity processing service. The special state NOT_MONITORED means that the device is not targeted by any activity rule. |
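Large fleets can be retrieved page by page with the limit and offset parameters. The sketch below is hypothetical. `iter_devices` takes an injected `fetch_page(limit, offset)` callable that stands in for the actual HTTP call, so the paging logic can be shown and tested offline.

```python
from typing import Callable, Iterator, List


def iter_devices(fetch_page: Callable[[int, int], List[dict]], limit: int = 20) -> Iterator[dict]:
    """Yield all devices by walking the list with the limit/offset query parameters.

    `fetch_page(limit, offset)` is a hypothetical callable that performs
    GET /api/v1/deviceMgt/devices?limit=<limit>&offset=<offset> and returns the
    decoded JSON array. Iteration stops at the first page shorter than `limit`.
    """
    offset = 0
    while True:
        page = fetch_page(limit, offset)
        yield from page
        if len(page) < limit:
            break
        offset += limit


# Offline usage example: 45 fake devices stand in for the API.
fake_devices = [{"id": f"urn:lo:nsid:sensor:temp{i:03d}"} for i in range(45)]
calls = []


def fake_fetch(limit: int, offset: int) -> List[dict]:
    calls.append(offset)
    return fake_devices[offset:offset + limit]


ids = [device["id"] for device in iter_devices(fake_fetch)]
```

Stopping on a short page costs one extra, empty request when the total is an exact multiple of `limit`.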

Simple devices list response example:

[
  {
    "id": "urn:lo:nsid:sensor:temp002",
    "name": "mySensor002",
    "group": {
      "id": "root",
      "path": "/"
    },
    "created": "2021-07-01T09:02:40.616Z"
  },
  {
    "id": "urn:lo:nsid:sensor:temp001",
    "name": "mySensor001",
    "group": {
      "id": "sWyaL2",
      "path": "/france/lyon"
    },
    "created": "2021-07-01T09:02:40.616Z"
  }
]

5.3.3. Get a device

5.3.3.1. Request

Endpoint:

GET /api/v1/deviceMgt/devices/<deviceId>

HTTP Headers:

X-API-Key: <your API key>
Accept: application/json

Example:

GET /api/v1/deviceMgt/devices/urn:lo:nsid:mqtt:myTest

5.3.3.2. Response

HTTP Code:

200 OK

Body:

Error case:

| HTTP Code | Error code | message |
|---|---|---|
| 400 | GENERIC_INVALID_PARAMETER_ERROR | The submitted parameter is invalid. |
| 401 | UNAUTHORIZED | Authentication failure. |
| 404 | DM_DEVICE_NOT_FOUND | Device not found. |
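The interface endpoints address each interface by an interfaceId of the form {connector}:{nodeId}. It can therefore be convenient to index a retrieved device's interfaces by that key. Below is a hypothetical Python helper that assumes the device JSON shape of the example response in this section. `UNKNOWN` is our own placeholder for a missing status.

```python
from typing import Dict


def interface_statuses(device: dict) -> Dict[str, str]:
    """Map each interface of a device to its status, keyed by "{connector}:{nodeId}"."""
    return {
        f"{iface['connector']}:{iface['nodeId']}": iface.get("status", "UNKNOWN")
        for iface in device.get("interfaces", [])
    }


device = {
    "id": "urn:lo:nsid:sensor:temp002",
    "interfaces": [
        {"connector": "mqtt", "nodeId": "urn:lo:nsid:mqtt:myTest", "status": "OFFLINE"},
    ],
}
```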

Example:

{
  "id": "urn:lo:nsid:sensor:temp002",
  "name": "mySensor002",
  "description": "This device was auto registered by the connector [mqtt] with the nodeId [urn:lo:nsid:mqtt:myTest]",
  "group": {
    "id": "root",
    "path": "/"
  },
  "defaultDataStreamId": "urn:lo:nsid:sensor:temp002",
  "created": "2021-07-01T09:02:40.616Z",
  "updated": "2021-07-01T09:04:46.752Z",
  "activityState": "NOT_MONITORED",
  "interfaces": [
    {
      "connector": "mqtt",
      "nodeId": "urn:lo:nsid:mqtt:myTest",
      "enabled": true,
      "status": "OFFLINE",
      "lastContact": "2021-08-13T09:05:06.751Z",
      "capabilities": {
        "configuration": {
          "available": false
        },
        "command": {
          "available": false
        },
        "resource": {
          "available": false
        },
        "twin": {
          "available": false
        }
      },
      "activity": {
        "apiKeyId": "60508c314ca6b82d6d605b1e",
        "mqttVersion": 4,
        "mqttUsername": "json+device",
        "mqttTimeout": 60,
        "remoteAddress": "82.13.102.175/27659",
        "lastSessionStartTime": "2021-08-13T09:03:21.158Z",
        "lastSessionEndTime": "2021-08-13T09:04:06.750Z"
      },
      "created": "2021-07-01T09:02:40.615Z",
      "updated": "2021-07-01T09:04:46.752Z"
    }
  ]
}

5.3.4. Delete device

5.3.4.1. Request

Endpoint:

DELETE /api/v1/deviceMgt/devices/<deviceId>

HTTP Headers:

X-API-Key: <your API key>
Accept: application/json

Example:

DELETE /api/v1/deviceMgt/devices/urn:lo:nsid:sensor:temp001

5.3.4.2. Response

HTTP Code:

204 NO CONTENT

Error case:

| HTTP Code | Error code | message |
|---|---|---|
| 400 | GENERIC_INVALID_PARAMETER_ERROR | The submitted parameter is invalid. |
| 404 | DM_DEVICE_NOT_FOUND | Device not found |

5.4. Device interface management

5.4.1. Add an interface to a registered device

5.4.1.1. Request

Endpoint:

POST /api/v1/deviceMgt/devices/<deviceId>/interfaces

HTTP Headers:

X-API-Key: <your API key>
Content-Type: application/json
Accept: application/json

Body:

| JSON Params | Description |
|---|---|
| connector | connector id |
| enabled | defines whether the interface is enabled or disabled |
| definition | interface definition. The definition depends on the connector (Cf. SMS interface definition or LoRa® interface definition). |

Currently, you can only create an SMS or a LoRa® interface; MQTT interfaces are auto-provisioned at the first connection.
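Below is a Python sketch that serializes an SMS interface body for this endpoint. `sms_interface_body` is a hypothetical helper. The optional `serverPhoneNumber` and `encoding` fields come from the SMS interface definition. `enabled` is always emitted as a JSON boolean, matching the responses shown in this guide.

```python
import json
from typing import Optional


def sms_interface_body(msisdn: str, enabled: bool = True,
                       server_phone_number: Optional[str] = None,
                       encoding: Optional[str] = None) -> str:
    """Serialize the body of POST /api/v1/deviceMgt/devices/<deviceId>/interfaces for SMS."""
    definition = {"msisdn": msisdn}
    if server_phone_number is not None:
        definition["serverPhoneNumber"] = server_phone_number
    if encoding is not None:
        definition["encoding"] = encoding  # the decoder must already be registered
    # Emit "enabled" as a JSON boolean (true/false), not as the string "true".
    return json.dumps({"connector": "sms", "enabled": bool(enabled), "definition": definition})
```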

Example: Create an SMS interface

POST /api/v1/deviceMgt/devices/urn:lo:nsid:sensor:temp001/interfaces

{
  "connector": "sms",
  "enabled": true,
  "definition": {
    "msisdn": "33601201201"
  }
}

5.4.1.2. Response

HTTP Code:

200 OK

Body:

Error case:

| HTTP Code | Error code | message |
|---|---|---|
| 400 | GENERIC_INVALID_PARAMETER_ERROR | The submitted parameter is invalid. |
| 403 | GENERIC_OFFER_DISABLED_ERROR | The requested service is disabled in your offer settings. Please contact a sales representative. |
| 404 | DM_DEVICE_NOT_FOUND | Device not found |
| 404 | DM_CONNECTOR_UNAVAILABLE | Connector not found or unavailable |
| 409 | DM_INTERFACE_DUPLICATE | Interface already exists. Conflict on (connector/nodeId) |

Example:

{
  "connector": "sms",
  "nodeId": "33601201201",
  "deviceId": "urn:lo:nsid:sensor:temp001",
  "enabled": true,
  "status": "ONLINE",
  "definition": {
    "msisdn": "33601201201"
  },
  "activity": {},
  "capabilities": {
    "command": {
      "version": 1,
      "available": true
    },
    "configuration": {
      "available": false
    },
    "resources": {
      "available": false
    }
  },
  "created": "2018-03-02T15:54:33.943Z",
  "updated": "2018-03-02T15:54:33.943Z"
}

5.4.2. List device interfaces

5.4.2.1. Request

Endpoint:

GET /api/v1/deviceMgt/devices/<deviceId>/interfaces

HTTP Headers:

X-API-Key: <your API key>
Accept: application/json

Example:

GET /api/v1/deviceMgt/devices/urn:lo:nsid:sensor:temp001/interfaces

5.4.2.2. Response

HTTP Code:

200 OK

Body: list of interfaces (Cf. interface object model)

Error case:

| HTTP Code | Error code | message |
|---|---|---|
| 400 | GENERIC_INVALID_PARAMETER_ERROR | The submitted parameter is invalid. |
| 404 | DM_DEVICE_NOT_FOUND | Device not found. |

Example:

[
  {
    "connector": "sms",
    "nodeId": "33601201201",
    "enabled": true,
    "status": "ONLINE",
    "activity": {},
    "definition": {
      "msisdn": "33601201201",
      "serverPhoneNumber": "12345"
    },
    "capabilities": {
      "command": {
        "version": 1,
        "available": true
      },
      "configuration": {
        "available": false
      },
      "resources": {
        "available": false
      }
    }
  },
  {
    "connector": "mqtt",
    "nodeId": "urn:lo:nsid:sensor:temp001",
    "enabled": true,
    "status": "ONLINE",
    "lastContact": "2018-03-02T15:57:23.772Z",
    "activity": {
      "apiKeyId": "6c2c569d91b5f174f60bd73d",
      "mqttVersion": 4,
      "mqttUsername": "json+device",
      "mqttTimeout": 60,
      "remoteAddress": "217.0.0.0/44341",
      "lastSessionStartTime": "2019-07-24T15:09:22.560Z",
      "lastSessionEndTime": "2019-07-24T16:20:37.333Z",
      "security": {
        "secured": true,
        "protocol": "TLSv1.2",
        "cipher": "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",
        "clientCertAuthentication": true,
        "sniHostname": "mqtt.liveobjects.orange-business.com"
      }
    },
    "capabilities": {
      "command": {
        "version": 1,
        "available": true (false if the device is OFFLINE or has not subscribed to the "dev/cmd" topic)
      },
      "configuration": {
        "version": 1,
        "available": true (false if the device is OFFLINE or has not subscribed to the "dev/cfg" topic)
      },
      "resources": {
        "version": 1,
        "available": true (false if the device is OFFLINE or has not subscribed to the "dev/rsc/upd" topic)
      }
    },
    "firmwares": { (same value as "resources", kept for compatibility reasons)
      "MyFW": "1.0.2"
    },
    "resources": {
      "MyFW": "1.0.2"
    }
  }
]

5.4.3. Get interface details

5.4.3.1. Request

Endpoint:

GET /api/v1/deviceMgt/devices/<deviceId>/interfaces/<interfaceId>

The interfaceId must have the format {connector}:{nodeId}.
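The path can be assembled programmatically. The hypothetical helper below keeps `:` unencoded, as in the examples, and percent-encodes other reserved characters. Whether the API expects other characters to be encoded this way is an assumption.

```python
from urllib.parse import quote


def interface_path(device_id: str, connector: str, node_id: str) -> str:
    """Build /api/v1/deviceMgt/devices/<deviceId>/interfaces/<connector>:<nodeId>."""
    interface_id = f"{connector}:{node_id}"
    # ':' is kept as-is, as in the examples; other reserved characters are percent-encoded.
    return (
        f"/api/v1/deviceMgt/devices/{quote(device_id, safe=':')}"
        f"/interfaces/{quote(interface_id, safe=':')}"
    )
```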

HTTP Headers:

X-API-Key: <your API key>
Accept: application/json

Example:

GET /api/v1/deviceMgt/devices/urn:lo:nsid:sensor:temp001/interfaces/sms:33601201201

5.4.3.2. Response

HTTP Code:

200 OK

Body:

Error case:

| HTTP Code | Error code | message |
|---|---|---|
| 403 | GENERIC_OFFER_DISABLED_ERROR | The requested service is disabled in your offer settings. Please contact a sales representative. |
| 404 | DM_CONNECTOR_UNAVAILABLE | Connector not found or unavailable |
| 404 | DM_INTERFACE_NOT_FOUND | Interface not found |
| 404 | DM_DEVICE_NOT_FOUND | Device not found |

Example:

{
  "connector": "sms",
  "nodeId": "33601201201",
  "deviceId": "urn:lo:nsid:sensor:temp001",
  "enabled": true,
  "status": "ONLINE",
  "definition": {
    "msisdn": "33601201201"
  },
  "activity": {
    "lastUplink": {
      "timestamp": "2018-03-05T10:43:46.268Z",
      "serverPhoneNumber": "20259"
    }
  },
  "capabilities": {
    "command": {
      "version": 1,
      "available": true
    },
    "configuration": {
      "available": false
    },
    "resources": {
      "available": false
    }
  },
  "created": "2018-03-05T10:20:06.404Z",
  "updated": "2018-03-05T10:20:06.408Z"
}

5.4.4. Update an interface

5.4.4.1. Request

Endpoint:

PATCH /api/v1/deviceMgt/devices/<deviceId>/interfaces/<interfaceId>

The interfaceId must have the format {connector}:{nodeId}.

HTTP Headers:

X-API-Key: <your API key>
Content-Type: application/json
Accept: application/json

Body:

| JSON Params | Description |
|---|---|
| deviceId | Optional. identifier of the device the interface should be attached to |
| enabled | Optional. defines whether the interface is enabled or disabled |
| definition | Optional. new interface definition |
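All three fields are optional, so a client may send only what changes. The hypothetical helper below builds such a partial body. Rejecting an empty update is our own choice, since this guide does not say how the API handles one.

```python
import json
from typing import Optional


def interface_patch_body(device_id: Optional[str] = None,
                         enabled: Optional[bool] = None,
                         definition: Optional[dict] = None) -> str:
    """Serialize a PATCH body that contains only the fields to change."""
    body = {}
    if device_id is not None:
        body["deviceId"] = device_id        # device the interface should belong to
    if enabled is not None:
        body["enabled"] = bool(enabled)     # JSON boolean, not the string "false"
    if definition is not None:
        body["definition"] = definition     # partial definition, e.g. {"encoding": ...}
    if not body:
        # Assumption: an empty PATCH is treated as a caller error.
        raise ValueError("at least one of deviceId, enabled or definition is required")
    return json.dumps(body)
```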

Example:

PATCH /api/v1/deviceMgt/devices/urn:lo:nsid:sensor:temp001/interfaces/sms:33601201201

{
  "deviceId": "urn:lo:nsid:sensor:temp002",
  "enabled": false,
  "definition": {
    "encoding": "myDecoder"
  }
}

5.4.4.2. Response

HTTP Code:

200 OK

Body:

Error case:

| HTTP Code | Error code | message |
|---|---|---|
| 404 | DM_INTERFACE_NOT_FOUND | Interface not found |
| 404 | DM_DEVICE_NOT_FOUND | Device not found |

Example:

{
  "connector": "sms",
  "nodeId": "33601201201",
  "deviceId": "urn:lo:nsid:sensor:temp002",
  "enabled": false,
  "status": "ONLINE",
  "definition": {
    "msisdn": "33601201201",
    "encoding": "myDecoder"
  },
  "activity": {
    "lastUplink": {
      "timestamp": "2018-03-05T10:43:46.268Z",
      "serverPhoneNumber": "20259"
    }
  },
  "capabilities": {
    "command": {
      "version": 1,
      "available": true
    },
    "configuration": {
      "available": false
    },
    "resources": {
      "available": false
    }
  },
  "created": "2018-03-05T10:20:06.404Z",
  "updated": "2018-03-05T13:51:09.312Z"
}

5.4.5. Delete an interface

5.4.5.1. Request

Endpoint:

DELETE /api/v1/deviceMgt/devices/<deviceId>/interfaces/<interfaceId>

The interfaceId must have the format {connector}:{nodeId}.

HTTP Headers:

X-API-Key: <your API key>
Accept: application/json

Example:

DELETE /api/v1/deviceMgt/devices/urn:lo:nsid:sensor:temp001/interfaces/sms:33601201201

5.4.5.2. Response

HTTP Code:

204 NO CONTENT

Error case:

| HTTP Code | Error code | message |
|---|---|---|
| 403 | GENERIC_OFFER_DISABLED_ERROR | The requested service is disabled in your offer settings. Please contact a sales representative. |
| 404 | DM_CONNECTOR_UNAVAILABLE | Connector not found or unavailable |
| 404 | DM_INTERFACE_NOT_FOUND | Interface not found |
| 404 | DM_DEVICE_NOT_FOUND | Device not found |

5.5. Connectivity

5.5.1. LoRa® connector

5.5.1.1. Definitions

| JSON Params | Description |
|---|---|
| devEUI | The global end-device ID of the interface (for more information, see chapter 6.2.1 of lora-alliance.org/wp-content/uploads/2020/11/lorawan1.0.3.pdf). |
| appEUI | The global application ID of the interface (for more information, see chapter 6.1.2 of lora-alliance.org/wp-content/uploads/2020/11/lorawan1.0.3.pdf). |
| appKey | The application key of the interface (for more information, see chapter 6.2.2 of lora-alliance.org/wp-content/uploads/2020/11/lorawan1.0.3.pdf). |
| activationType | OTAA: Over The Air Activation. |
| profile | Profile of the interface, which represents its Class (A or C). It can be specific to an interface (e.g. LoRaMote devices) or generic (e.g. LoRaWAN/DemonstratorClasseA or LoRaWAN/DemonstratorClasseC). |
| encoding | Optional. Encoding type of the binary payload sent by the interface. The decoder must be registered first (Cf. "Decoding service" section). |
| connectivityOptions | Connectivity options used for the interface. Supported options are ackUl and location. |
| connectivityPlan | Connectivity plan to use for the interface. |

Example:

{
  "devEUI": "0101010210101010",
  "profile": "Generic_classA_RX2SF9",
  "activationType": "OTAA",
  "appEUI": "9879876546543211",
  "appKey": "11223344556677889988776655443322",
  "connectivityOptions": {
    "ackUl": true,
    "location": false
  },
  "connectivityPlan": "orange-cs/CP_Basic"
}

Important notice: some features are only available if you have subscribed to the corresponding offer. You may therefore have the rights set on your tenant but no access to some features, because these features are not activated on your tenant account (check the tenant offer). An option applied to a device is effective only if the option is allowed for the tenant. If both connectivityOptions and connectivityPlan are set, connectivityPlan is used. connectivityPlan should be preferred over connectivityOptions, but at least one of the two must be defined. The connectivityPlan value can be chosen from the list returned by the List connectivity plans API.
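The constraints in the notice above can be checked before calling the API. In this sketch, the hexadecimal lengths follow from the LoRaWAN identifier sizes (64-bit EUIs, 128-bit AES AppKey) and from the string form used in the examples. The helper names are hypothetical, and the checks are not an exhaustive copy of the platform's own validation.

```python
import re
from typing import Dict, List, Tuple

HEX_64_BIT = re.compile(r"[0-9A-Fa-f]{16}")   # EUI-64 as hex string: devEUI, appEUI
HEX_128_BIT = re.compile(r"[0-9A-Fa-f]{32}")  # AES-128 key as hex string: appKey


def check_lora_definition(definition: Dict) -> List[str]:
    """Return the problems found in a LoRa interface definition (empty list if none)."""
    problems = []
    for field, pattern in (("devEUI", HEX_64_BIT), ("appEUI", HEX_64_BIT), ("appKey", HEX_128_BIT)):
        if not pattern.fullmatch(str(definition.get(field, ""))):
            problems.append(f"{field} must match {pattern.pattern}")
    if "connectivityPlan" not in definition and "connectivityOptions" not in definition:
        problems.append("define connectivityPlan (preferred) or connectivityOptions")
    return problems


def effective_connectivity(definition: Dict) -> Tuple[str, object]:
    """connectivityPlan takes precedence when both settings are present."""
    if "connectivityPlan" in definition:
        return "connectivityPlan", definition["connectivityPlan"]
    return "connectivityOptions", definition.get("connectivityOptions")


example = {
    "devEUI": "0101010210101010", "profile": "Generic_classA_RX2SF9", "activationType": "OTAA",
    "appEUI": "9879876546543211", "appKey": "11223344556677889988776655443322",
    "connectivityOptions": {"ackUl": True, "location": False},
    "connectivityPlan": "orange-cs/CP_Basic",
}
```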

5.5.1.2. Activity

| JSON Params | Description |
|---|---|
| lastActivationTs | Optional. last activation date of the interface |
| lastDeactivationTs | Optional. last deactivation date of the interface |
| lastSignalLevel | Optional. last signal level: a signal level computed from the last uplink. This value is the same as the one shown in the data message (LoRa® message signalLevel). |
| avgSignalLevel | Optional. average signal level: the level of the signal during the last uplinks transmitted by the device. The value, between 1 and 5, is computed from the uplinks history (aggregate of the PER, SNR and ESP quality indicators). On a frame counter reset, the uplinks history and the average signal level are reset. |
| lastBatteryLevel | Optional. last battery level (for more information, see chapter 5.5 of lora-alliance.org/wp-content/uploads/2020/11/lorawan1.0.3.pdf). |
| lastDlFcnt | Optional. last downlink frame counter |
| lastUlFcnt | Optional. last uplink frame counter |

Example:

{
  "activity": {
    "lastActivationTs": "2018-03-19T13:02:13.482Z",
    "lastDeactivationTs": "2018-03-20T14:10:26.231Z",
    "lastSignalLevel": 5,
    "avgSignalLevel": 4,
    "lastBatteryLevel": 54,
    "lastDlFcnt": 7,
    "lastUlFcnt": 1
  }
}

5.5.1.3. Status

LoRa® supports the following statuses: REGISTERED, INITIALIZING, INITIALIZED, ACTIVATED, DEACTIVATED, REACTIVATED and CONNECTIVITY_ERROR. The following diagram shows how the LoRa® connector sets the interface status. The consistency check referred to in the diagram is an automatic periodic check that verifies that the information held by Live Objects and by the LoRa® network provider is consistent; it can detect potential problems on a LoRa® interface.
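The diagram itself is not reproduced in this text. As a simplified reading of the interface status descriptions (not the connector's actual implementation), the LoRa® lifecycle can be sketched as a transition table. The event names are hypothetical, and CONNECTIVITY_ERROR, which is raised by the consistency check, is left out.

```python
# Hypothetical event names: "join_request", "join_accept", "uplink" (a non-MAC
# uplink), "disable" and "enable". Unlisted combinations leave the status unchanged.
_TRANSITIONS = {
    ("REGISTERED", "join_request"): "INITIALIZING",
    ("INITIALIZING", "join_accept"): "INITIALIZED",
    ("INITIALIZED", "uplink"): "ACTIVATED",
    ("REACTIVATED", "join_request"): "INITIALIZING",
    ("REACTIVATED", "uplink"): "ACTIVATED",
}


def next_lora_status(status: str, event: str) -> str:
    """Return the interface status after `event` under this simplified model."""
    if event == "disable":
        return "DEACTIVATED"
    if event == "enable":
        return "REACTIVATED" if status == "DEACTIVATED" else status
    return _TRANSITIONS.get((status, event), status)


status = "REGISTERED"
for event in ("join_request", "join_accept", "uplink"):
    status = next_lora_status(status, event)
```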

5.5.1.4. Examples

5.5.1.4.1. Register a device with LoRa® interface

For more information on device creation, see the Register a device section.

Request:

POST /api/v1/deviceMgt/devices

{
  "id": "urn:lo:nsid:lora:0101010210101010",
  "tags": ["Lyon", "Test"],
  "name": "myLoraSensor",
  "description": "device with LoRa interface",
  "properties": {
    "manufacturer": "Orange",
    "model": "LoraSensor"
  },
  "interfaces": [
    {
      "connector": "lora",
      "enabled": true,
      "definition": {
        "devEUI": "0101010210101010",
        "profile": "Generic_classA_RX2SF9",
        "activationType": "OTAA",
        "appEUI": "9879876546543211",
        "appKey": "11223344556677889988776655443322",
        "connectivityOptions": {
          "ackUl": true,
          "location": false
        },
        "connectivityPlan": "orange-cs/CP_Basic"
      }
    }
  ],
  "group": {
    "id": "sWyaL2",
    "path": "/france/lyon"
  }
}

Response:

200 OK

{
  "id": "urn:lo:nsid:lora:0101010210101010",
  "name": "myLoraSensor",
  "description": "device with LoRa interface",
  "tags": ["Test", "Lyon"],
  "properties": {
    "manufacturer": "Orange",
    "model": "LoraSensor"
  },
  "group": {
    "id": "sWyaL2",
    "path": "/france/lyon"
  },
  "interfaces": [
    {
      "connector": "lora",
      "nodeId": "0101010210101010",
      "enabled": true,
      "status": "REGISTERED",
      "definition": {
        "devEUI": "0101010210101010",
        "profile": "Generic_classA_RX2SF9",
        "activationType": "OTAA",
        "appEUI": "9879876546543211",
        "appKey": "11223344556677889988776655443322",
        "connectivityOptions": {
          "ackUl": true,
          "location": false
        },
        "connectivityPlan": "orange-cs/CP_Basic"
      }
    }
  ],
  "defaultDataStreamId": "urn:lo:nsid:lora:0101010210101010",
  "created": "2018-03-06T13:23:37.712Z",
  "updated": "2018-03-06T13:23:37.945Z"
}

5.5.1.4.2. Add a LoRa® interface to a registered device

For more information on adding an interface, see the Add an interface to a registered device section.

Request:

POST /api/v1/deviceMgt/devices/urn:lo:nsid:sensor:temp001/interfaces

{
  "connector": "lora",
  "enabled": true,
  "definition": {
    "devEUI": "0202020220202020",
    "profile": "Generic_classA_RX2SF9",
    "activationType": "OTAA",
    "appEUI": "4573876546543211",
    "appKey": "11113344556677889988776655443322",
    "connectivityOptions": {
      "ackUl": true,
      "location": false
    },
    "connectivityPlan": "orange-cs/CP_Basic"
  }
}

Response:

201 CREATED

{
  "connector": "lora",
  "nodeId": "0202020220202020",
  "deviceId": "urn:lo:nsid:sensor:temp001",
  "enabled": true,
  "status": "REGISTERED",
  "definition": {
    "devEUI": "0202020220202020",
    "activationType": "OTAA",
    "profile": "Generic_classA_RX2SF9",
    "appEUI": "4573876546543211",
    "connectivityPlan": "orange-cs/CP_Basic"
  },
  "activity": {},
  "created": "2018-03-06T13:37:31.397Z",
  "updated": "2018-03-06T13:37:31.397Z"
}

5.5.1.4.3. List LoRa® connectivity plans

A connectivity plan defines the interface capabilities and parameters that the network needs to provide the access service. This information must be provided by the manufacturer.

5.5.1.4.4. Request

Endpoint:

GET /api/v1/deviceMgt/connectors/lora/connectivities

HTTP Headers:

X-API-Key: <your API key>
Accept: application/json

5.5.1.4.5. Response

HTTP Code:

200 OK

Body:

[
  {
    "id": "orange-cs/CP_Basic",
    "name": "CP_Basic",
    "parameters": {
      "nbTransMax": 3,
      "location": false,
      "ackUl": false,
      "sfMax": 12,
      "sfMin": 7,
      "nbTransMin": 1
    }
  },
  {
    "id": "orange-cs/CP_ACK",
    "name": "CP_ACK",
    "parameters": {
      "nbTransMax": 3,
      "location": false,
      "ackUl": true,
      "sfMax": 12,
      "sfMin": 7,
      "nbTransMin": 1
    }
  }
]

Error case:

| HTTP Code | Error code | message |
|---|---|---|
| 403 | 4030 | Service is disabled. Please contact your sales entry point. |
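connectivityPlan is preferred over connectivityOptions, so a client may list the plans and pick one whose parameters match the options it needs. Here is a hypothetical helper, shown on the two plans from the response above:

```python
from typing import Any, Iterable, List


def plans_matching(plans: Iterable[dict], **wanted: Any) -> List[str]:
    """Return the ids of connectivity plans whose parameters equal every wanted value."""
    return [
        plan["id"]
        for plan in plans
        if all(plan.get("parameters", {}).get(key) == value for key, value in wanted.items())
    ]


plans = [
    {"id": "orange-cs/CP_Basic", "name": "CP_Basic",
     "parameters": {"nbTransMax": 3, "location": False, "ackUl": False, "sfMax": 12, "sfMin": 7, "nbTransMin": 1}},
    {"id": "orange-cs/CP_ACK", "name": "CP_ACK",
     "parameters": {"nbTransMax": 3, "location": False, "ackUl": True, "sfMax": 12, "sfMin": 7, "nbTransMin": 1}},
]
print(plans_matching(plans, ackUl=True))  # ['orange-cs/CP_ACK']
```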

5.5.1.4.6. Get LoRa® interfaces profiles

An interface profile defines the interface capabilities and boot parameters that the network needs to provide the access service. This information must be provided by the manufacturer.

The profile of an interface represents its Class (A or C). It can be specific to an interface (e.g. LoRaMote devices) or generic (e.g. LoRaWAN/DemonstratorClasseA or LoRaWAN/DemonstratorClasseC).

5.5.1.4.7. Request

Endpoint:

GET /api/v1/deviceMgt/connectors/lora/profiles

HTTP Headers:

X-API-Key: <your API key>
Accept: application/json

5.5.1.4.8. Response

HTTP Code:

200 OK

Body:

[
  "JRI",
  "DP_Generic_AS923_ClassA",
  "ERCOGENER_EG-IoT",
  "Sensing_Labs_T_IP68",
  "ESM 5k ELSYS Class A",
  "NETVOX_R711_AS923_ClassA",
  "Connit Pulse",
  "OCEAN_OBR-L",
  "ATIM Class A",
  "Adeunis RF Demonstrator_US_915",
  "DECENTLAB_DLR2-EU868",
  "DIGITAL_MATTER_EU868"
]

Error case:

| HTTP Code | Error code | message |
|---|---|---|
| 403 | 4030 | Service is disabled. Please contact your sales entry point. |

5.5.2. SMS connector

5.5.2.1. Definitions

| JSON Params | Description |
|---|---|
| msisdn | device MSISDN: number uniquely identifying a subscription in a GSM (Global System for Mobile communications) network. |
| serverPhoneNumber | Optional. server phone number. Must be defined in the offer settings. |
| encoding | Optional. name of the decoder used to decode received SMS messages. The decoder must be registered first (Cf. "Decoding service" section). |

{
  "msisdn": "33601201201",
  "serverPhoneNumber": "20259",
  "encoding": "myDecoder"
}

The msisdn is an international phone number: 6 to 15 digits, starting with the country code (e.g. '33') and without the international prefix (e.g. '00').

Dummy examples of phone numbers:

-

Belgium: 32654332211

-

France: 33654332211

-

Spain: 34654332211

-

Romania: 40654332211

-

Slovakia: 421654332211
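The msisdn format rule can be expressed as a regular expression. This Python sketch is an approximation. It requires 6 to 15 digits with a non-zero first digit, since country codes never start with 0. It also rejects a leading `+`; that is an assumption, because this guide only rules out prefixes such as '00'.

```python
import re

# 6..15 digits, first digit 1-9 (a leading 0 would mean a prefix was kept).
# Assumption: a leading '+' is rejected as well.
_MSISDN = re.compile(r"[1-9][0-9]{5,14}")


def is_valid_msisdn(value: str) -> bool:
    """Check the international msisdn format expected by the SMS interface definition."""
    return _MSISDN.fullmatch(value) is not None


examples = ["32654332211", "33654332211", "34654332211", "40654332211", "421654332211"]
```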

5.5.2.2. Eligible cellular subscriptions for SMS connectivity

To send and receive SMS messages to and from a device using an SMS interface, the device must have a cellular subscription from one of the following operators:

5.5.2.3. Activity

| JSON Params | Description |
|---|---|
| lastUplink | Optional. last uplink activity of the interface |
| lastDownlink | Optional. last downlink activity of the interface |

lastUplink and lastDownlink have the following format:

| JSON Params | Description |
|---|---|
| timestamp | date of the activity |
| serverPhoneNumber | server phone number used |

Example:

{
  "activity": {
    "lastUplink": {
      "timestamp": "2020-09-07T14:50:04.352Z",
      "serverPhoneNumber": "+3320259"
    },
    "lastDownlink": {
      "timestamp": "2020-09-07T14:42:24.180Z",
      "serverPhoneNumber": "20259"
    }
  }
}

5.5.2.4. Status

The SMS connector supports the ONLINE and DEACTIVATED statuses described in the interface status section. When an SMS interface is created, its status is set to ONLINE and does not change while the interface remains enabled. When an SMS interface is disabled, its status is set to DEACTIVATED.

5.5.2.5. Examples

5.5.2.5.1. Register a device with an SMS interface

For more information on device creation, see the Register a device section.

Request:

POST /api/v1/deviceMgt/devices

{
  "id": "urn:lo:nsid:sensor:temp002",
  "tags": ["Lyon", "Test"],
  "name": "mySensor002",
  "description": "moisture sensor",
  "properties": {
    "manufacturer": "Orange",
    "model": "MoistureSensorV3"
  },
  "interfaces": [
    {
      "connector": "sms",
      "enabled": true,
      "definition": {
        "msisdn": "33600000001",
        "serverPhoneNumber": "20259"
      }
    }
  ],
  "group": {
    "id": "sWyaL2",
    "path": "/france/lyon"
  }
}

Response:

200 OK

{
  "id": "urn:lo:nsid:sensor:temp002",
  "name": "mySensor002",
  "description": "moisture sensor",
  "tags": ["Test", "Lyon"],
  "properties": {
    "manufacturer": "Orange",
    "model": "MoistureSensorV3"
  },
  "group": {
    "id": "sWyaL2",
    "path": "/france/lyon"
  },
  "interfaces": [
    {
      "connector": "sms",
      "nodeId": "33600000001",
      "enabled": true,
      "status": "ONLINE",
      "definition": {
        "msisdn": "33600000001",
        "serverPhoneNumber": "20259"
      }
    }
  ],
  "defaultDataStreamId": "urn:lo:nsid:sensor:temp002",
  "created": "2018-03-06T11:30:42.777Z",
  "updated": "2018-03-06T11:30:42.819Z"
}

5.5.2.5.2. Add an SMS interface to a registered device

For more information on adding an interface, including an example with an SMS interface, see the Add an interface to a registered device section.

Request:

POST /api/v1/deviceMgt/devices/urn:lo:nsid:sensor:temp001/interfaces

{
  "connector": "sms",
  "enabled": true,
  "definition": {
    "msisdn": "33600000001",
    "serverPhoneNumber": "20259"
  }
}

Response:

201 CREATED

{
  "connector": "sms",
  "nodeId": "33600000001",
  "deviceId": "urn:lo:nsid:sensor:temp001",
  "enabled": true,
  "status": "ONLINE",
  "definition": {
    "msisdn": "33600000001",
    "serverPhoneNumber": "20259"
  },
  "activity": {},
  "created": "2018-03-06T13:37:31.397Z",
  "updated": "2018-03-06T13:37:31.397Z"
}

5.5.3. MQTT connector

For information about the MQTT interface and the messages your device can send or receive, refer to the MQTT device mode section.

5.5.3.1. Definition

| JSON Params | Description |
|---|---|
| clientId | device clientId used in MQTT device mode. |
| encoding | Optional. name of the decoder used to decode data received from this interface. It overrides the 'metadata.encoding' value of this device’s data messages. The decoder must be registered first (Cf. "Decoding service" section). |

{
  "clientId": "mydevice_001",
  "encoding": "myEncoding_v1"
}

5.5.3.2. Activity

| JSON Params | Description |
|---|---|
| apiKeyId | Optional. id of the API key used for the last device connection |
| mqttVersion | Optional. MQTT version used by the device MQTT client |
| mqttUsername | Optional. MQTT username used by the device MQTT client |
| mqttTimeout | Optional. MQTT timeout configured by the device MQTT client |
| remoteAddress | Optional. public IP address of the device MQTT client |
| lastSessionStartTime | Optional. last MQTT session start date |
| lastSessionEndTime | Optional. last MQTT session end date |
| security | security information |

security has the following format:

| JSON Params | Description |
|---|---|
| secured | whether a security protocol is (or was) used |
| protocol | Optional. security protocol used |
| cipher | Optional. cipher suite used |
| clientCertAuthentication | Optional. whether client certificate authentication is (or was) used |
| sniHostname | Optional. hostname provided by the Server Name Indication extension |

Example:

{

"activity" : {

"apiKeyId" : "5de8d14085a455f5c8525655",

"mqttVersion" : 4,

"mqttUsername" : "json+device",

"mqttTimeout" : 60,

"remoteAddress" : "217.167.1.65/61214",

"lastSessionStartTime" : "2020-08-18T15:32:33.510Z",

"lastSessionEndTime" : "2020-08-18T15:48:51.488Z",

"security" : {

"secured": true,

"protocol": "TLSv1.2",

"cipher": "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",

"clientCertAuthentication": true,

"sniHostname": "mqtt.liveobjects.orange-business.com"

}

}

}

5.5.3.3. Status

The MQTT connector supports the ONLINE, OFFLINE, REGISTERED and DEACTIVATED statuses. The following diagram shows how the MQTT connector sets interfaces' status.

5.5.3.4. Examples

5.5.3.4.1. Register a device with an MQTT interface

For more information on device creation, please see the "Register a device" section.
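The registration request in this example can also be sent from a script. The Python sketch below uses only the standard library. The base URL `https://liveobjects.orange-business.com` and the `X-API-Key` authentication header are assumptions to check against your own account settings, and the actual call is commented out so the sketch runs without network access.

```python
import json
import urllib.request

# Assumptions: adjust the base URL and API key to your Live Objects account.
BASE_URL = "https://liveobjects.orange-business.com"
API_KEY = "<your-api-key>"


def build_register_request(device: dict, base_url: str = BASE_URL, api_key: str = API_KEY) -> urllib.request.Request:
    """Prepare POST /api/v1/deviceMgt/devices with a JSON device body."""
    return urllib.request.Request(
        url=base_url + "/api/v1/deviceMgt/devices",
        data=json.dumps(device).encode("utf-8"),
        # The X-API-Key header name is an assumption for this sketch.
        headers={"Content-Type": "application/json", "X-API-Key": api_key},
        method="POST",
    )


device = {
    "id": "urn:lo:nsid:mqtt:mydevice_001",
    "tags": ["Lyon", "Test"],
    "name": "mySensor002",
    "description": "moisture sensor",
    "properties": {"manufacturer": "Orange", "model": "MoistureSensorV3"},
    "interfaces": [
        {"connector": "mqtt", "enabled": True, "definition": {"clientId": "mydevice_001"}}
    ],
    "group": {"id": "sWyaL2", "path": "/france/lyon"},
}

request = build_register_request(device)
# Uncomment to send the request (requires network access and a valid API key):
# with urllib.request.urlopen(request) as response:
#     print(response.status, json.loads(response.read()))
```

Building the request separately from sending it makes the body easy to inspect before any device is actually created.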

Request:

POST /api/v1/deviceMgt/devices

{

"id": "urn:lo:nsid:mqtt:mydevice_001",

"tags": ["Lyon", "Test"],

"name": "mySensor002",

"description": "moisture sensor",

"properties" : {

"manufacturer": "Orange",

"model": "MoistureSensorV3"

},

"interfaces": [

{

"connector": "mqtt",

"enabled": true,

"definition": {

"clientId" : "mydevice_001"

}

}

],

"group": {

"id": "sWyaL2",

"path": "/france/lyon"

}

}

Response:

200 OK

{

"id": "urn:lo:nsid:mqtt:mydevice_001",

"name": "mySensor002",

"description": "moisture sensor",

"tags": [

"Test",

"Lyon"

],

"properties": {

"manufacturer": "Orange",

"model": "MoistureSensorV3"

},

"group": {

"id": "sWyaL2",

"path": "/france/lyon"

},

"interfaces": [

{

"connector": "mqtt",

"nodeId": "mydevice_001",

"enabled": true,

"status": "REGISTERED",

"definition": {

"clientId" : "mydevice_001"

}

}

],

"defaultDataStreamId": "urn:lo:nsid:mqtt:mydevice_001",

"created": "2018-03-06T11:30:42.777Z",

"updated": "2018-03-06T11:30:42.819Z"

}

5.5.4. LwM2M/CoAP Connector

This connector implements LwM2M connectivity, using CoAP as the underlying messaging protocol.

5.5.4.1. LwM2M interface representation

In addition to the unified representation of Live Objects interfaces, the LwM2M interface has fields that provide information specific to LwM2M devices.

5.5.4.2. Definition

The definition contains the endpoint name of the LwM2M device, the security credentials it uses and its bootstrap setting.

"definition": {

"endpointName": "My_Device_End_Point_Name",

"security": {

"mode": "PSK",

"pskInfo": {

"identity": "My_PSK_Identity"

}

},

"bootstrap": {

"managed": "false"

}

}

| JSON Params | Description |
|---|---|
| endpointName | Unique identifier of the LwM2M interface. |
| security.mode | The security mode. "PSK" (DTLS Pre-Shared Key) is currently the only supported security mode. |
| security.pskInfo.identity | The Pre-Shared Key (PSK) identity. |
| security.pskInfo.secret | The Pre-Shared Key (PSK) secret. |
| bootstrap.managed | Whether the Live Objects Bootstrap server is allowed to automatically provision information in the interface definition (e.g. the security section). If enabled, the 'security' section must not be set manually. |
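Before submitting a definition, a client can check the rules stated in this table locally. The Python sketch below is a hypothetical validator: treating `endpointName` and `pskInfo.identity` as mandatory, and accepting `managed` either as a boolean or as the string "true"/"false", are assumptions for illustration.

```python
from typing import List


def validate_lwm2m_definition(definition: dict) -> List[str]:
    """Check an LwM2M interface definition against the documented rules."""
    errors: List[str] = []
    # Assumption: endpointName, the unique interface identifier, is mandatory.
    if not definition.get("endpointName"):
        errors.append("endpointName is required")
    managed = definition.get("bootstrap", {}).get("managed", False)
    # The sample definition writes "managed" as the string "false"; accept
    # both booleans and "true"/"false" strings (assumption).
    if isinstance(managed, str):
        managed = managed.strip().lower() == "true"
    security = definition.get("security")
    if managed:
        # Bootstrap-managed interfaces get their security section provisioned.
        if security is not None:
            errors.append("security must not be set when bootstrap.managed is true")
    elif security is not None:
        if security.get("mode") != "PSK":
            errors.append("only the PSK security mode is supported")
        elif not security.get("pskInfo", {}).get("identity"):
            # Assumption: a PSK identity is needed for DTLS-PSK.
            errors.append("security.pskInfo.identity is required in PSK mode")
    return errors


example = {
    "endpointName": "My_Device_End_Point_Name",
    "security": {"mode": "PSK", "pskInfo": {"identity": "My_PSK_Identity"}},
    "bootstrap": {"managed": "false"},
}
print(validate_lwm2m_definition(example))
```

Returning a list of messages instead of raising on the first error lets a caller report every problem in one pass.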

5.5.4.3. Activity

The activity item provides connectivity data: whether the device is active (or inactive) on the network, and how it interacts with the Live Objects LwM2M connector.

"activity": {

"lwm2mVersion": "1.1",

"remoteAddress": "190.92.12.113",

"remotePort": 59436,

"queueMode": false,

"bindings": [

"U"

],

"lastRegistrationDate": "2022-02-16T09:08:26.995Z",

"registrationLifetime": 300,

"lastUpdateDate": "2022-02-16T09:17:17.301Z",

"lastBootstrapProvisioningDate": "2022-02-16T09:08:23.621Z"

}

| JSON Params | Description |
|---|---|
| lwm2mVersion | LwM2M protocol version supported by the device. |
| remoteAddress | For IP connections, the IP address of the device registered on the Live Objects LwM2M service. |
| remotePort | Remote port used by the device. |
| lastRegistrationDate | Last registration date. |
| registrationLifetime | Maximum duration of the interface's registered status. The lifetime is managed by the LwM2M connector: when it expires, the device is automatically deregistered and the connector changes the interface status to OFFLINE. The value is expressed in seconds and can be announced by the device when it registers (default: 300 seconds). |
| queueMode | Boolean indicating whether the device uses queue mode, in which it goes to the SLEEPING status after a timeout. It is set to false by default; only devices that support queue mode can have queueMode set to true. |
| bindings | List of the network/transport bindings supported by the device. Only UDP ("U") is currently supported. |
| lastUpdateDate | Last update date of the LwM2M interface parameters. |
| lastBootstrapProvisioningDate | The last time the device definition was updated by the Live Objects Bootstrap server (only if bootstrap.managed is true in the interface definition or if it is an auto-provisioned device). |
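The registration lifetime can be used to estimate when an interface will go OFFLINE if the device stays silent. The Python sketch below assumes, as in the LwM2M protocol, that a registration update renews the lifetime, so the countdown starts from the later of `lastRegistrationDate` and `lastUpdateDate`; the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

DEFAULT_LIFETIME_S = 300  # default registration lifetime stated in this guide


def parse_ts(value: str) -> datetime:
    # Replace the trailing "Z": fromisoformat() accepts it only from Python 3.11.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def registration_expiry(activity: dict) -> Optional[datetime]:
    """Estimate when the current LwM2M registration lapses."""
    lifetime = activity.get("registrationLifetime", DEFAULT_LIFETIME_S)
    # Assumption: a registration update renews the lifetime, so count from
    # the most recent registration or update date.
    stamps = [
        parse_ts(activity[key])
        for key in ("lastRegistrationDate", "lastUpdateDate")
        if activity.get(key)
    ]
    if not stamps:
        return None
    return max(stamps) + timedelta(seconds=lifetime)


def is_expired(activity: dict, now: Optional[datetime] = None) -> bool:
    expiry = registration_expiry(activity)
    now = now or datetime.now(timezone.utc)
    return expiry is not None and now >= expiry


activity = {
    "lastRegistrationDate": "2022-02-16T09:08:26.995Z",
    "registrationLifetime": 300,
    "lastUpdateDate": "2022-02-16T09:17:17.301Z",
}
print(registration_expiry(activity))
```

This is only an estimate from the client side; the connector itself decides when to deregister the device.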

5.5.4.4. Status

The LwM2M device interface supports the following statuses:

| Status | Description |
|---|---|
| REGISTERED | The LwM2M interface is registered in Live Objects and the LwM2M connector is waiting for a connection from the device. The TWIN capability is set to FALSE (the device twin is added at the same time as the interface is created). |
| ONLINE | The LwM2M interface is connected to the LwM2M connector and is actively listening. The TWIN capability is set to TRUE. |
| SLEEPING | The LwM2M interface is connected to the LwM2M connector but is no longer actively listening (PSM = Power Saving Mode, used if queue mode is supported by the device). |
| OFFLINE | The LwM2M interface is disconnected and the LwM2M connector is waiting for the next connection request. The TWIN capability is set to FALSE. |
| DEACTIVATED | The LwM2M interface was deactivated by the user. |
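A client application that polls device interfaces might encode this table directly. The Python sketch below reflects only what the table states: ONLINE and SLEEPING are "connected", only ONLINE is actively listening, and the TWIN capability is known for REGISTERED, ONLINE and OFFLINE only. The enum and helper names are hypothetical, and an unknown status string raises `ValueError`.

```python
from enum import Enum
from typing import Optional


class Lwm2mStatus(Enum):
    REGISTERED = "REGISTERED"
    ONLINE = "ONLINE"
    SLEEPING = "SLEEPING"
    OFFLINE = "OFFLINE"
    DEACTIVATED = "DEACTIVATED"


# Statuses the table describes as connected to the LwM2M connector.
_CONNECTED = {Lwm2mStatus.ONLINE, Lwm2mStatus.SLEEPING}

# TWIN capability where the table states it; other statuses map to None.
_TWIN = {Lwm2mStatus.REGISTERED: False, Lwm2mStatus.ONLINE: True, Lwm2mStatus.OFFLINE: False}


def is_connected(status: str) -> bool:
    return Lwm2mStatus(status) in _CONNECTED


def is_actively_listening(status: str) -> bool:
    # Only ONLINE interfaces are in active listening; SLEEPING ones are not.
    return Lwm2mStatus(status) is Lwm2mStatus.ONLINE


def twin_capability(status: str) -> Optional[bool]:
    return _TWIN.get(Lwm2mStatus(status))


for s in ("REGISTERED", "ONLINE", "SLEEPING", "OFFLINE", "DEACTIVATED"):
    print(s, is_connected(s), is_actively_listening(s), twin_capability(s))
```

Returning None instead of guessing keeps the sketch from asserting a TWIN value the table does not give.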

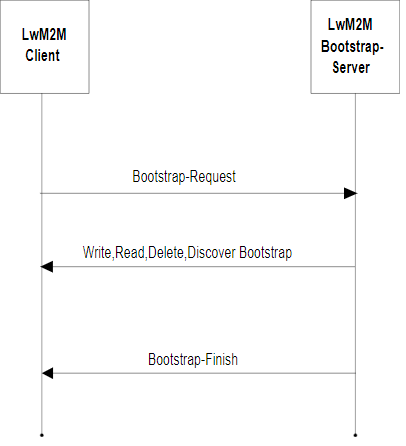

The following diagram shows how LwM2M connector sets interfaces' status.

5.5.4.5. Examples

5.5.4.5.1. Create a LwM2M device